RSA with CRT Part 2: Implementing Fast RSA

This post is the second in a two-part series about recently implemented optimizations to speed up performance of our open-source, embedded RSA implementation. Read part one here. This post will cover the actual implementation of RSA CRT key generation and modular exponentiation. Our implementation focuses on correctness, maintainability and performance.


Now that we have a sense of the math behind RSA CRT, we might wonder what a practical implementation looks like. Besides correctness, real RSA implementations need to contend with practical matters like limited compute resources, long-term maintainability, and certifiability under essential cryptographic standards such as FIPS 140-3.

We’ll cover not only the path to implementing CRT modular exponentiation itself, but also the fine technical details of working with limited memory and high register pressure while remaining performant and avoiding timing side-channels.

While performance is essential for any deployed cryptographic implementation, certifiability is also critical, because standards like FIPS are often important requirements for commercial opportunities. As such, we’ll highlight the technical nuances of how we maintained FIPS compatibility while performing this significant optimization.

To get a sense of what such a performant, certifiable, and maintainable implementation might look like, let’s analyze our implementation of the CRT optimization for RSA in depth, starting with the changes to key generation.

CRT Key Generation (or, Working with Too Little Memory)

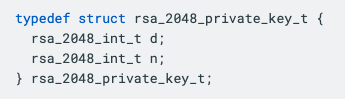

The first step is changing the private key format. In naive RSA implementations, private keys only need to store the modulus n and the private exponent d, because they compute ciphertexts/signatures directly as c = m^d mod n.

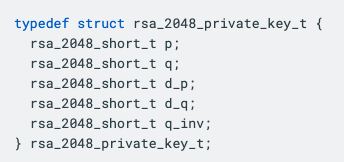

For RSA CRT, we instead work directly with the primes p and q, and need to store the precomputed values dp, dq and qinv. For RSA-2048, this takes us from a private key format of

(n, d): two 2048-bit values

to a new CRT format of

(p, q, dp, dq, qinv): five 1024-bit values

Note that we’re now storing five 1024-bit numbers instead of two 2048-bit numbers, so private keys grow by 25%. Since this changes the ABI as well as the keyblob size, it’s essential to make changes like this before the initial release of a cryptographic library, avoiding backwards-compatibility breakages that could severely impact users.
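As a quick sanity check on the 25% figure, the sketch below tallies the field widths of both formats for RSA-2048. It assumes each value is stored at exactly its nominal width with no padding or header; the dictionary names are illustrative, not the library’s actual key structures.

```python
RSA_BITS = 2048

# Field widths in bits for each private key format (names are illustrative).
PLAIN_FORMAT = {"n": RSA_BITS, "d": RSA_BITS}
CRT_FORMAT = {name: RSA_BITS // 2 for name in ("p", "q", "dp", "dq", "qinv")}


def size_bytes(fmt: dict) -> int:
    """Total storage for a key format, in bytes."""
    return sum(fmt.values()) // 8


plain, crt = size_bytes(PLAIN_FORMAT), size_bytes(CRT_FORMAT)
print(plain, crt, crt / plain)  # 512 640 1.25
```

The ratio is 5 * (1/2) / 2 = 1.25 for any RSA size, since every CRT field is half the modulus width.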

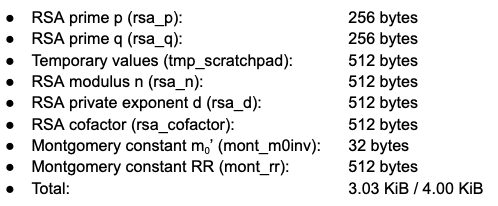

Along with changing the key format, we also need to change the key generation routines to output the values (p, q, dp, dq, qinv) instead of just (n, d). When writing programs for our asymmetric cryptography accelerator (based on the OpenTitan BigNum implementation), we start by carving up the data memory (“dmem”) into the regions that we’ll use to store different values. Depending on the hardware generation, this data memory can be as small as 4 KiB. Previously, the data memory allocation looked like:

(Some parenthetical details: the rsa_cofactor buffer is for inputting a cofactor (either p or q) to reconstruct an RSA keypair; this is handy for importing keys. The mont_m0inv and mont_rr buffers are precomputed constants for Montgomery multiplication, a fast modular multiplication algorithm that helps speed up the generation of primes p and q.)

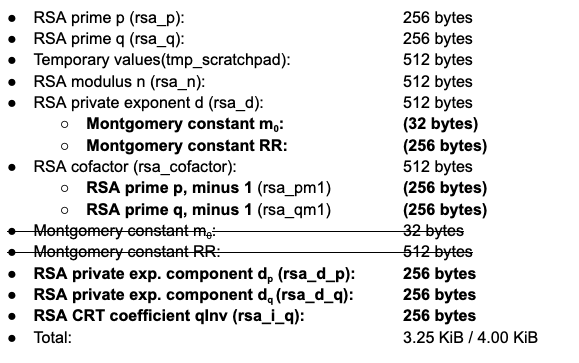

Now that we also need to compute dp, dq and qinv, as well as track other intermediate values, our new dmem memory map looks like (overlapping buffers shown as sub-bullet points)

which just barely leaves enough spare data memory for, e.g., the auxiliary buffers that tests use. Note that some buffers are used multiple times; for instance, the Montgomery constants aren’t used after computing p and q, so we can reclaim that space for storing d later in the key generation routine.

The careful eye might catch two lines above that look somewhat silly; why are dedicated buffers rsa_pm1 and rsa_qm1 needed for storing p - 1 and q - 1? The answer is that the least common multiple function overwrites its inputs to reduce dmem overhead. If we just subtracted one from rsa_p and rsa_q in place, as the prior key generation code did, the LCM computation would clobber the primes in each buffer, resulting in garbage values for p and q in the generated private key.

Next, let’s look at the actual changes to the algorithm. The prior key generation approach looked something like

- Generate random primes p and q

- Compute n = p * q

- Compute lcm(p - 1, q - 1)

- Compute d = e^(-1) mod lcm(p - 1, q - 1)

- We always take e = 65537 here

- Check that d is ‘large enough’, if not, start over

Here, ‘large enough’ for d means large enough to prevent methods like Wiener’s attack or the Boneh-Durfee attack from recovering the private key. The latter attack works for d < n^0.292, so for safety, NIST SP 800-56B (necessary for FIPS certification) requires that d be at least half the RSA size in bits.

To convert the above to CRT, we now have to add steps for computing our additional values. We also move our computation of n to the end to allow use of rsa_n as an additional working buffer when computing dp and dq; this has the nice side effect of shaving a few cycles when d isn’t sufficiently large the first time.

- Generate random primes p and q

- Compute lcm(p - 1, q - 1)

- Compute d = e^(-1) mod lcm(p - 1, q - 1)

- We always take e = 65537 here

- Check that d is ‘large enough’, if not, start over

- Compute dp = d mod (p - 1)

- Compute dq = d mod (q - 1)

- Compute qinv = q^(-1) mod p

- Compute n = p * q
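To make the reordered steps concrete, here is a Python sketch of everything after prime generation. It assumes p and q are already-generated primes passed in by the caller, uses Python’s built-in `pow(x, -1, m)` (Python 3.8+) for the inverses rather than a constant-time routine, and models the ‘large enough’ check as d > 2^(nlen/2); the function name and dictionary output are hypothetical.

```python
from math import gcd

E = 65537  # the fixed public exponent used in the post


def crt_keygen_from_primes(p: int, q: int, e: int = E):
    """Derive the CRT private key values from two primes, in the order listed above.

    Returns None when d is too small, signalling that new primes are needed.
    """
    pm1, qm1 = p - 1, q - 1  # kept in separate variables, like rsa_pm1 / rsa_qm1
    lam = pm1 * qm1 // gcd(pm1, qm1)  # lcm(p - 1, q - 1)
    d = pow(e, -1, lam)  # d = e^(-1) mod lcm(p - 1, q - 1)
    # 'Large enough' check: d must exceed 2^(nlen/2), where nlen is the RSA size.
    nlen = (p * q).bit_length()
    if d <= 1 << (nlen // 2):
        return None
    dp = d % pm1
    dq = d % qm1
    qinv = pow(q, -1, p)
    n = p * q  # computed last, mirroring the reordered list
    return {"n": n, "d": d, "p": p, "q": q, "dp": dp, "dq": dq, "qinv": qinv}


print(crt_keygen_from_primes(61, 53))  # toy primes, far too small for real use
```

With the textbook toy primes 61 and 53 this yields d = 413, dp = 53, dq = 49 and qinv = 38; a real implementation would loop back to prime generation whenever the function returns None.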

The computations of dp and dq are straightforward, but computing modular inverses securely can be tricky. One thing to watch out for is ensuring that the runtime of the modular inverse doesn’t depend on its inputs; otherwise, an attacker could time how long the algorithm takes to run and leak bits of the key. While this sounds highly theoretical, it was actually the basis of the recent EUCLEAK attack, which allows unauthorized cloning of smartcards and FIDO keys.

In our implementation, we make use of the constant-time binary extended Euclidean algorithm (EEA) from BoringSSL, as formally verified by David Benjamin in the fiat-crypto project. Rather than performing a long division at each step as the typical extended Euclidean algorithm does, this binary EEA shuffles intermediate values around so that it only requires division by two, which can be performed with a simple bit shift.
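To illustrate the ‘division by two only’ idea, here is a textbook binary modular inversion for an odd modulus, written in Python. It is a simplified stand-in, not BoringSSL’s verified constant-time algorithm: its loop count and branches depend on the inputs, which is exactly the timing behavior the hardened version is designed to avoid. The function name is our own.

```python
def binary_mod_inverse(a: int, m: int) -> int:
    """Return a^(-1) mod m using only subtraction and halving.

    Assumes m is odd and gcd(a, m) == 1. NOT constant time.
    """
    if m % 2 == 0:
        raise ValueError("this sketch requires an odd modulus")
    u, v = a % m, m
    # Invariants: x1 * a ≡ u (mod m) and x2 * a ≡ v (mod m).
    x1, x2 = 1, 0
    while u != 1 and v != 1:
        if u == 0:
            raise ValueError("a is not invertible modulo m")
        # Halve u, and halve x1 modulo m (add the odd m first if x1 is odd).
        while u % 2 == 0:
            u //= 2
            x1 = x1 // 2 if x1 % 2 == 0 else (x1 + m) // 2
        while v % 2 == 0:
            v //= 2
            x2 = x2 // 2 if x2 % 2 == 0 else (x2 + m) // 2
        # Subtract the smaller value from the larger, preserving the invariants.
        if u >= v:
            u -= v
            x1 -= x2
        else:
            v -= u
            x2 -= x1
    return (x1 if u == 1 else x2) % m


print(binary_mod_inverse(53, 61))  # 38
```

Every halving in this sketch would be a single right shift on the accelerator; the hardened version additionally runs a fixed number of iterations and replaces the branches with constant-time selects.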

Like the traditional EEA, however, the binary EEA requires a lot of memory. For everything to fit in dmem, we make extensive use of currently unused buffers as scratch space. For instance, when computing qinv with the EEA, we use all of tmp_scratchpad, rsa_d, and rsa_cofactor for a cumulative 1.25 KiB of scratch space.

As a last wistful note, the particularly keen reader might wonder whether we could get away without computing d = e^(-1) mod lcm(p - 1, q - 1); after all, we immediately reduce it mod (p - 1) and (q - 1), so it should suffice to compute dp = e^(-1) mod (p - 1) and dq = e^(-1) mod (q - 1) directly.

While this would be a nice trick, we do need to check that d is sufficiently large as part of FIPS compliance, and there’s no practical way to determine this short of computing d. The CRT does guarantee that, given d mod (p - 1) = dp and d mod (q - 1) = dq, we can recover a unique d modulo lcm(p - 1, q - 1); this is left as an exercise to the reader (hint: apply CRT to p - 1 and (q - 1) / gcd(p - 1, q - 1)) and suggests the following possible approach:

- …

- Compute dp = e^(-1) mod (p - 1)

- Compute dq = e^(-1) mod (q - 1)

- Compute k = (q - 1) / gcd(p - 1, q - 1)

- Recover d from dp, dq mod k

- Check that d is ‘large enough’

- …

but to our knowledge this hasn’t shown up in practice, most likely because the overhead of computing k and performing the CRT reconstruction outweighs any benefit.

CRT Modular Exponentiation (or, Working with Too Few Registers)

When running the CRT modexp, we expand from a simple call to the square-and-multiply routine to the following:

- Compute mp = m mod p

- Compute cp = (mp)^(dp) mod p (square-and-multiply)

- Compute mq = m mod q

- Compute cq = (mq)^(dq) mod q (square-and-multiply)

- Compute c = q * ((cp - cq mod p) * qinv mod p) + cq

While this looks like a fairly straightforward series of operations, first note that we’ve rearranged the steps so that mq is only computed once cp is complete; this is because we don’t have sufficient memory to store mq across the first square-and-multiply call. In fact, the result of the first modular exponentiation overwrites the buffer storing dp, since we need all of the remaining buffer space to compute cq afterward and dp isn’t used again.

Fortunately, once we reach the CRT reconstruction step, a number of buffers free up for use. Things become fairly straightforward, and we use a simple conditional addition and binary long division for the last two reductions mod p. (We could certainly modify the square-and-multiply function to output cp and cq in Montgomery form, but for simplicity we perform these last two operations outside the Montgomery domain.)
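The five CRT modexp steps can be modeled in a few lines of Python to check the arithmetic. This is a sketch, not the accelerator code: Python’s built-in three-argument `pow` stands in for the square-and-multiply routine, nothing here is constant time, and the toy key (p = 61, q = 53, d = 413, dp = 53, dq = 49, qinv = 38) is a well-known textbook example far too small for real use.

```python
def crt_modexp(m: int, p: int, q: int, dp: int, dq: int, qinv: int) -> int:
    """Compute m^d mod (p * q) from the CRT key values, following the listed steps.

    Assumes dp = d mod (p - 1), dq = d mod (q - 1) and qinv = q^(-1) mod p.
    """
    mp = m % p
    cp = pow(mp, dp, p)  # first half-size exponentiation
    mq = m % q  # only computed after cp, as in the reordered steps
    cq = pow(mq, dq, q)  # second half-size exponentiation
    # Reconstruction: c = q * ((cp - cq mod p) * qinv mod p) + cq
    h = ((cp - cq) % p) * qinv % p
    return q * h + cq


print(crt_modexp(2790, 61, 53, 53, 49, 38))  # 65, same as pow(2790, 413, 3233)
```

Python’s `%` always returns a non-negative result, which plays the role of the conditional addition of p when cp - cq is negative.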

Memory space isn’t the only limited resource when writing assembly for embedded targets like this cryptographic accelerator; the other difficulty can be running out of available registers.

The accelerator has 32 32-bit general purpose registers available, and when running the existing modexp routine, 20+ of those get overwritten with working values. On top of this, the CRT modexp routine needs to take in 12 input registers containing pointers to memory for output. At first glance, this seems to be a hopeless situation: we don’t have enough registers for inputs and working values together.

This is a situation that compilers run into frequently. When you compile a program from a higher-level language and the number of registers needed approaches the number of total registers, it can be difficult to assign values to registers in a way that keeps the values around for long enough. This phenomenon is called “register pressure,” and the process of assigning values to registers is called “register allocation”; higher register pressure makes register allocation difficult to impossible.

To address this, compilers will typically handle high register pressure with “spilling,” where the compiler inserts instructions to stash some register values in memory, loading the values back into registers once they’re needed. This isn’t ideal though, as it incurs a performance penalty and may expose sensitive values if attackers can snoop on the memory bus.

Fortunately, when hand-writing assembly, one can often avoid spilling to memory by a bit of register gymnastics. By shuffling values out of registers which get overwritten (or “clobbered”) before a section of code, and then restoring them as needed, we can get the correct values where we need them without incurring a significant performance hit.

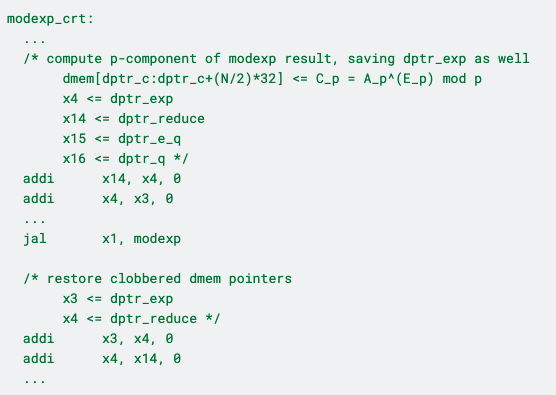

As an example, here’s where we compute cp = (mp)^(dp) mod p by calling the pre-existing square-and-multiply routine:

Here’s the same routine in pseudocode, for simplicity:

Copy register x4 -> x14

Copy register x3 -> x4

…

Call square-and-multiply routine

Copy register x4 -> x3

Copy register x14 -> x4

Here, the register x14 is used as an input to modexp containing the pointers to the base, exponent, and modulus respectively. x3 and x4 contain pointers to important working buffers, and modexp clobbers 20+ registers, including x3.

At this point in the program, no free registers remain to hold the value in x3. Fortunately, modexp doesn’t overwrite its input register x14 (or x4), so we can park x4’s value in x14 and use x4 itself as storage for x3, retrieving both values after the function call. In other words, we shuffle

x14 <= x4 <= x3,

call modexp, and then shuffle the values back as

x3 <= x4 <= x14

restoring x3 and x4 in a situation where it looked like we were out of registers. Because the register pressure is so high throughout the bulk of the modular exponentiation routine, this required precise attention to exactly how registers could be rearranged to avoid spilling.

Security and Maintenance Considerations (or, Working with Too Many Details)

To keep such code maintainable as well as performant, we carefully document the values stored in each register throughout the code, and at the top of each method maintain

a list of input and output registers, including buffer sizes

a list of all registers clobbered by the routine

a list of all CPU flags potentially overwritten

as well as any additional function-specific details needed by a caller. For instance, at the top of the modular inversion routine used to compute qinv = q^(-1) mod p, we have the following doc comment:

which informs a caller that any values in registers x2 to x6, x31, or w20 to w26 should be moved elsewhere before calling. Part of maintaining assembly for a cryptographic coprocessor (and frankly, any cryptographic code!) is meticulously ensuring that documentation like this is kept up to date. One future task we’d like to tackle is adding further linting checks to ensure such information stays in sync with the code.

Automated checks can be invaluable in developing software, especially when security is a concern. As an example, the legacy codebase had a basic built-in mechanism for verifying that a provided function is constant time, protecting against simple timing attacks.

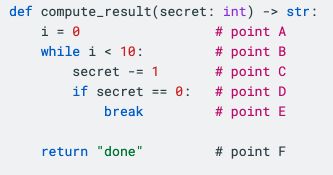

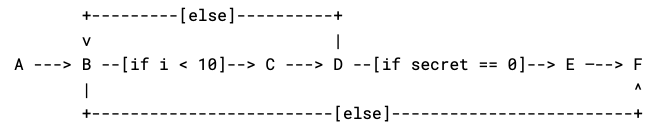

One approach to check whether a function is constant time is to run it on a bunch of random inputs and compare the number of clock cycles each time. While this can be useful for proving a function isn’t constant time, it can’t prove a function is constant time unless it ends up trying every single possible input. Instead, the aforementioned mechanism doesn’t run the code at all: it scans the assembly itself and builds out a graph of the control flow of the program, inspecting what makes the program flow from one node to the next.

As an example, a toy Python program like

would have a control flow graph of

Each point in this graph is a commented point in the program above, and each edge is a part of the program that could run next. If there are multiple places a program could go (e.g. an if statement), we label the edge with the condition for it being taken.

By inspecting this graph, we can see that there is one edge that depends on the secret value we provide our function. This corresponds to the if secret == 0: break part of the program, where we exit the loop early if our secret value becomes 0. Indeed, if our secret value is any integer from 1 to 10, then the loop will exit early and an attacker timing the function would be able to detect this. The constant-time check script uses this principle to show that functions are constant time as part of the standard test suite. To allow testing alongside all the other crypto tests, an otbn_consttime_test Bazel rule lets developers specify exactly which routines and sensitive values to trace on every test suite run.
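The same idea can be sketched on a toy intermediate representation. The block format, variable names, and functions below are all hypothetical and far simpler than the real checker, which analyzes accelerator assembly: each basic block records which variables it assigns from which sources and ends with either a jump or a conditional branch. Secrets are propagated through assignments until nothing changes, and any branch whose condition reads a tainted variable is reported.

```python
from typing import Dict, List, Optional, Set, Tuple

# A basic block is (assignments, terminator):
#   assignments: list of (destination, [source variables])
#   terminator:  None for exit, ("jump", target), or
#                ("branch", [condition variables], target_if_true, target_if_false)
Block = Tuple[List[Tuple[str, List[str]]], Optional[tuple]]


def tainted_vars(blocks: Dict[str, Block], secrets: Set[str]) -> Set[str]:
    """Flow-insensitive taint propagation, iterated to a fixed point."""
    tainted = set(secrets)
    changed = True
    while changed:
        changed = False
        for assigns, _ in blocks.values():
            for dst, srcs in assigns:
                if dst not in tainted and any(s in tainted for s in srcs):
                    tainted.add(dst)
                    changed = True
    return tainted


def secret_dependent_branches(blocks: Dict[str, Block], secrets: Set[str]) -> List[str]:
    """Names of blocks whose choice of outgoing edge depends on a secret."""
    tainted = tainted_vars(blocks, secrets)
    return [
        name
        for name, (_, term) in blocks.items()
        if term is not None and term[0] == "branch" and any(v in tainted for v in term[1])
    ]


# Hypothetical toy loop: decrement the secret each iteration and exit early at 0.
toy = {
    "entry": ([("i", [])], ("jump", "body")),
    "body": ([("secret", ["secret"]), ("i", ["i"])], ("branch", ["secret"], "exit", "loop_check")),
    "loop_check": ([], ("branch", ["i"], "body", "exit")),
    "exit": ([], None),
}
print(secret_dependent_branches(toy, {"secret"}))  # ['body']
```

This sketch ignores implicit flows (values assigned inside secret-dependent branches), which a production checker would also need to track.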

Conclusion

Cryptography can be delightfully infuriating in the way that a cryptosystem expressed in just a few lines of math can require thousands of lines of careful, reasoned code changes to implement properly. Details like performance, maintainability, side channel protections, backwards compatibility, memory and register limitations, and testing implications all must be front-of-mind for the engineer when implementing these systems.

Fortunately, with a combination of careful resource utilization, detailed documentation, clever tooling, and a bit of perseverance, we can find ways to sustainably squeeze out additional performance in even the most resource-constrained systems.

If you’re interested in learning more about our approach to maintainable cryptography, or how ZeroRISC is democratizing access to secure silicon, sign up for our early-access program or drop us a line at info@zerorisc.com.

RSA with CRT Part 1: Speed up RSA by 3x with This One Simple Trick

This post is the first of a two-part series describing recent improvements to make our embedded RSA software (originally developed in the OpenTitan codebase) production ready, leading to a huge speedup for core operations.

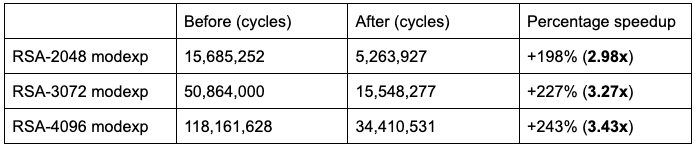


Note: This is the full-length modular exponentiation that forms the core of signing and decryption operations in the RSA cryptosystem. Top-level speedups for signing and decryption are similar.

In this post, we’ll go over the mathematical basis for this standard optimization. In the second part, we’ll go deeper into implementation details and share what a major, specialized, pure-assembly code change looks like in practice.

The Chinese Remainder Theorem

The technique used is ancient, originating in the 3rd-century work of mathematician Sun Zi, with a complete theorem dating back to 1247. It states that, if a set of integer moduli n_0, …, n_(k-1) is pairwise coprime (meaning that the GCD of any pair is 1), then for any set of residues a_0, …, a_(k-1) there is a solution x that is equivalent to a_i modulo n_i for every i, and furthermore that the solution x is unique modulo N = n_0 * … * n_(k-1).

This theorem enables us to translate a problem modulo N into a problem modulo factors of N, without introducing ambiguity or multiple solutions. Operations like multiplication modulo large numbers scale more than linearly with the bit length of the modulus, so doing an operation twice modulo two half-size factors is significantly faster than doing the same operation once with one full-sized value. This is the fundamental property we used to speed up RSA: a standard technique today, but non-trivial to implement in new cryptographic libraries.
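A minimal Python sketch of the theorem’s constructive form: for pairwise coprime moduli, each residue is lifted using the product of the other moduli and that product’s inverse. The function name `crt` is our own, and `pow(x, -1, m)` and `math.prod` require Python 3.8 or later.

```python
from math import prod


def crt(residues, moduli):
    """Return the unique x mod N = prod(moduli) with x ≡ residues[i] (mod moduli[i]).

    Assumes the moduli are pairwise coprime, as the theorem requires.
    """
    N = prod(moduli)
    x = 0
    for a_i, n_i in zip(residues, moduli):
        N_i = N // n_i  # product of all the other moduli
        # This term is ≡ a_i (mod n_i) and ≡ 0 modulo every other modulus.
        x += a_i * N_i * pow(N_i, -1, n_i)
    return x % N


print(crt([2, 3, 2], [3, 5, 7]))  # 23, Sun Zi's classic example
```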

The RSA Cryptosystem

RSA was first described in 1977 and is one of the first cryptographic schemes that could operate using separate public and private keys (as opposed to requiring that the people communicating with each other have a previously shared secret key). It’s one of the oldest algorithms that’s still considered secure and in widespread use, whose remarkable endurance can be attributed to its elegant mathematical simplicity.

The core of the algorithm fits in just a few lines (eliding a couple of details, see IETF RFC 8017 for a more formal and authoritative description):

- Choose a fixed, public exponent e. It can be anything, but popular choices for efficiency include 3 and 65537.

- Randomly generate two prime numbers p and q. Their product, n = p * q, combined with the exponent e, is your public key. The bit-length of n determines the “RSA size”; RSA-2048 means that p and q are 1024 bits each and n is 2048 bits.

- Compute the inverse of e modulo lcm(p - 1, q - 1); that is, find a number d such that e * d mod lcm(p - 1, q - 1) = 1. This integer, d, is your private key. Without knowing p and q, it’s not feasible for someone else to compute it.

- To encrypt a message, add padding according to your chosen scheme (e.g. OAEP) to get an integer m representing the message, and compute the ciphertext c = m^e mod n.

- To decrypt the message, the recipient computes m = c^d mod n.

This works because (m^e)^d mod n = m^(ed) mod n, and ed mod lcm(p - 1, q - 1) = 1, so ed = k * lcm(p - 1, q - 1) + 1 for some integer k. Therefore, m^(ed) = m^(k * lcm(p - 1, q - 1) + 1). By Fermat’s Little Theorem, we know that since p and q are prime, any number not divisible by p raised to a multiple of (p - 1) is equivalent to 1 modulo p, and similarly any number not divisible by q raised to a multiple of (q - 1) is equivalent to 1 modulo q. Since lcm(p - 1, q - 1) is a multiple of both, we can cancel out the k * lcm(p - 1, q - 1) factor of the exponent both modulo p and modulo q, and conclude that m^(ed) is equivalent to m modulo p and also modulo q. (If m happens to be a multiple of p, both sides are simply 0 modulo p, and likewise for q.) By the Chinese Remainder Theorem (hello again!), this means m^(ed) mod n = m, the original message.
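The steps above can be checked end to end with deliberately tiny numbers. This sketch uses the common textbook parameters p = 61, q = 53, e = 17 and skips padding entirely, so it only demonstrates the arithmetic; real keys use primes of 1024 bits or more and a padding scheme such as OAEP. The function name is our own.

```python
from math import gcd


def textbook_rsa_demo(p: int, q: int, e: int, m: int):
    """Unpadded ("textbook") RSA with toy parameters, for illustration only."""
    n = p * q
    lam = (p - 1) * (q - 1) // gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    d = pow(e, -1, lam)  # e * d ≡ 1 (mod lcm(p - 1, q - 1))
    c = pow(m, e, n)  # encrypt: c = m^e mod n
    m_out = pow(c, d, n)  # decrypt: m = c^d mod n
    return n, d, c, m_out


print(textbook_rsa_demo(61, 53, 17, 65))  # (3233, 413, 2790, 65)
```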

This scheme works for signatures too. An RSA signature uses the same underlying modular operations as encryption; to sign a message, the signer creates a signature s = m^d mod n and the verifier checks it by computing s^e mod n and checking that the result is equivalent to the message. Essentially, signing uses the same operation as decryption, and verifying uses the same operation as encryption.

Of the RSA operations:

Encryption and verification (exponentiation with e) are generally very fast, since e is public and small.

Signing and decryption (exponentiation with d) are usually much slower, since d is large and must be kept secret.

Key generation is usually extremely slow compared to either of these, and especially compared to more modern public-key schemes based on elliptic curves. Picking random primes is not easy!

RSA with CRT

The above description of RSA is mathematically accurate, and it’s what our initial implementation in the prior codebase computed. However, if you look at RFC 8017 or most performant RSA implementations, you’ll see a lot of references to “CRT” and numbers like dp and dq that don’t appear here. This is because most production-quality implementations use the Chinese Remainder Theorem (CRT) to translate expensive RSA operations mod n into operations modulo p and q.

Here, we’ll dive into the high-level mathematical description of implementing RSA with CRT. In the next blog post, we’ll describe implementing each part of this description on an asymmetric cryptography accelerator, the critical part of a hardware root of trust that performs cryptographic operations such as RSA.

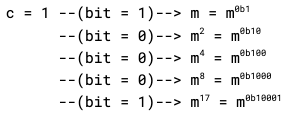

First, let’s review how one might directly compute an RSA signature, i.e. calculate c = m^d mod n. The standard approach is the “square-and-multiply” algorithm, which reads the binary representation of d bit by bit to compute m^d mod n. Here’s what it looks like in practice:

- Start with c = 1.

- Read out each bit of the exponent d, left to right:

- If the bit is 0, replace c with c^2 mod n

- If the bit is 1, replace c with c^2 * m mod n

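To see why this works, take d = 17. In binary, d = 0b10001. Reading the bits of d left to right, our result becomes (dropping the “mod n” in each line for clarity)

- Bit 1: c = 1^2 * m = m

- Bit 0: c = m^2

- Bit 0: c = (m^2)^2 = m^4

- Bit 0: c = (m^4)^2 = m^8

- Bit 1: c = (m^8)^2 * m = m^17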

Every time we see a ‘0’, we square our result, which doubles the exponent, which in turn puts a binary ‘0’ on the end of the exponent. Every time we see a ‘1’, we square our result and multiply by m, which doubles and adds one to the exponent, putting a binary ‘1’ there instead. Bit by bit, we build out our exponent.
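The bit-by-bit walk-through can be reproduced in Python. The sketch below (function name our own) records the exponent reached after each bit alongside the result; note that it branches on each bit of d, so, like the description above, it is not constant time.

```python
def square_and_multiply(m: int, d: int, n: int):
    """Left-to-right square-and-multiply.

    Returns (m^d mod n, list of the exponents reached after each bit).
    """
    c, exp = 1, 0
    trace = []
    for bit in bin(d)[2:]:  # bits of d, most significant first
        c = c * c % n  # squaring doubles the exponent (appends a binary 0)
        exp *= 2
        if bit == "1":
            c = c * m % n  # multiplying by m adds one (turns that 0 into a 1)
            exp += 1
        trace.append(exp)
    return c, trace


print(square_and_multiply(65, 17, 3233))  # (2790, [1, 2, 4, 8, 17])
```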

While this modular exponentiation (or “modexp”) algorithm is perfectly functional, its runtime scales with the cube of the bit length of its inputs. As such, we’d rather run this modexp algorithm mod p and mod q, instead of mod n.

Here’s what a divide and conquer RSA with CRT approach might look like instead:

- [Divide] Compute mp = m mod p and mq = m mod q

- [Conquer] Calculate cp = (mp)^d mod p and cq = (mq)^d mod q (using square-and-multiply)

- [Reconstruct] Use cp and cq to recover the unique c where c mod p = cp and c mod q = cq

This will certainly speed things up, but we can do even better. Recall that by Fermat’s Little Theorem, any number not divisible by p, raised to the power (p - 1), is equivalent to 1 mod p. So if we write d as d = (d mod (p - 1)) + k(p - 1) for some k, we can simply cancel out the k(p - 1) when performing our modexp. This lets us rewrite the algorithm as

- …

- [Conquer] Calculate cp = (mp)^(d mod (p - 1)) mod p and cq = (mq)^(d mod (q - 1)) mod q

- …

which halves the time it takes to compute cp and cq with our square-and-multiply algorithm, since there are now half as many bits in our exponent.
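A quick numeric check of this exponent reduction, reusing the toy prime p = 61 and private exponent d = 413 from the standard small RSA example (p = 61, q = 53, e = 17); these values are illustrative only:

```python
p, d = 61, 413  # toy values, far too small for real use
dp = d % (p - 1)
# Fermat: for mp not divisible by p, mp^(p - 1) ≡ 1 (mod p), so only d mod (p - 1) matters.
# For mp = 0 both sides are 0 (dp > 0 here), so the identity holds for every residue mod p.
assert all(pow(mp, d, p) == pow(mp, dp, p) for mp in range(p))
print(dp)  # 53
```

With real keys, d is about as long as n while dp is about as long as p, which is where the halved exponent length comes from.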

To complete our RSA CRT algorithm, let’s think about how we’d actually implement each step. The first step, labeled “[Divide]” above, can be done using simple modular reduction: we can just perform long division and take the remainder. The ‘conquer’ step can be done using our square-and-multiply algorithm. What about the reconstruction step? The CRT guarantees us a unique c with c mod p = cp and c mod q = cq, but it doesn’t provide us an explicit way to recover that c.

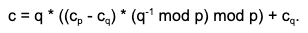

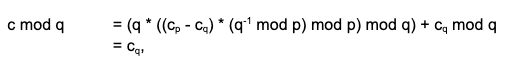

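Fortunately, there is an explicit formula we can use: Garner’s formula. Given cp and cq, we can recover c as

c = q * ((cp - cq) * qinv mod p) + cq, where qinv = q^(-1) mod p.

To see why this formula works, we manually compute

c mod p = (q * qinv * (cp - cq) + cq) mod p = ((cp - cq) + cq) mod p = cp

and

c mod q = (q * ((cp - cq) * qinv mod p) + cq) mod q = cq mod q = cq

just as we hoped.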
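Garner’s formula is short enough to check exhaustively for small primes. This sketch (function name our own) recomputes qinv on every call for clarity, whereas an RSA implementation would precompute it, and confirms that the result matches both residues for every pair (cp, cq) with p = 61 and q = 53:

```python
def garner(cp: int, cq: int, p: int, q: int) -> int:
    """Recombine residues mod p and mod q into the unique c mod p * q."""
    qinv = pow(q, -1, p)  # in RSA this is precomputed at key generation time
    return q * (((cp - cq) % p) * qinv % p) + cq


p, q = 61, 53
assert all(
    garner(cp, cq, p, q) % p == cp and garner(cp, cq, p, q) % q == cq
    for cp in range(p)
    for cq in range(q)
)
print(garner(4, 12, p, q))  # 65
```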

While most of this formula is addition and multiplication mod p, there is the need to compute q^(-1) mod p, which is a bit more expensive. Fortunately, this value doesn’t depend on our base or exponent, just the cofactors of n, so we can precompute it along with dp = d mod (p - 1) and dq = d mod (q - 1) when we generate our RSA keys.

At last, our complete version of the RSA CRT modexp algorithm is (changes in bold):

- [Preconditions] Assume we’ve already precomputed dp = d mod (p - 1), dq = d mod (q - 1), and qinv = q^(-1) mod p.

- [Divide] Compute mp = m mod p and mq = m mod q

- [Conquer] Calculate cp = (mp)^(dp) mod p and cq = (mq)^(dq) mod q, using the square-and-multiply algorithm

- [Reconstruct] Recover c = q * ((cp - cq) * qinv mod p) + cq as the result

For a performance estimate, we note that the runtime in practice is dominated by the two modular exponentiations in the ‘conquer’ step. Since p and q are half the size of n, the two modexp calls together should take 2 * (1/2)^3 = 1/4 of the time of the direct approach, giving an upper bound of a 4x speedup. As the performance table at the top of this post shows, we can quickly approach this in practice for large RSA sizes where the exponentiations mod p and q dominate the runtime.

That’s all for this installment, but stay tuned for part 2 to hear how these mathematical changes transform the code itself!

If you’re interested in learning more about our approach to maintainable, production-grade cryptography, or hearing about ZeroRISC in general, sign up for our early-access program or reach out at info@zerorisc.com.

Tidying Up: FIPS-Compliant Secure Zeroization for OTP

This blog post covers ZeroRISC’s recent contributions implementing one-time programmable (OTP) memory zeroization to achieve FIPS 140-3 compliance. We did this in partnership with Rivos Inc, using the code at git hash 032df24, as part of our commitment to aligning open silicon with important industry security standards. A future post will describe how we’re advancing that mission further via the GlobalPlatform organization’s Trusted Open Silicon Task Force.


In this technical post, we’ll address what the FIPS 140-3 requirements for zeroization are, how we improved the prior OTP design to meet these requirements, and what the hardware verification process looked like.

Zeroization and FIPS 140-3: Erasing Secrets Safely

There are many ways a secure device can reach its end of life, including planned decommissioning of a functioning device or return of a defective one. Regardless of context, it’s critical that no cryptographic secrets remain on a device once it’s taken out of service and changes hands.

The typical process for this is zeroization: at a device’s end of life, a special routine is run on the device to zero out all sensitive regions of OTP. In practice, this means blowing all the remaining OTP fuses, leaving the memory cleared.

While this may sound like a straightforward operation, there are a number of details that make zeroization tricky to implement:

Blowing fuses requires a lot of charge, so fuses need to be blown word-by-word

Due to manufacturing variations, a small fraction of OTP fuses may simply not blow

If power loss happens during zeroization, the device may be in an in-between state where some secrets are still accessible but others aren’t

The device must be able to reliably determine whether zeroization completed

As such, zeroization is a good target for standardization. FIPS 140-3 dictates a number of requirements regarding zeroization, summarized in the Implementation Guidance for FIPS 140-3 sections 9.7.A and B. At a high level, these state that zeroization…

…must overwrite all secrets with 0s, 1s, or random data (assertion AS09.30).

…must inform the device to treat zeroized values as inaccessible (assertion AS09.29).

…must erase any integrity values like checksums for secrets (assertion AS09.28).

…must somehow indicate a completion status to a user (assertion AS09.33).

It is important to note that FIPS 140-3 extends the prior zeroization requirements of critical security parameters (CSPs) to also include all unprotected sensitive security parameters (SSPs), regardless of their security level, along with public keys. To see what meeting these requirements looks like in practice, let’s review our approach to OTP zeroization.

Practical Zeroization: How to Tidy Up OTP

As a first practical detail, we need to consider which parts of OTP should be zeroized in the first place. In order to store different kinds of device state, the OTP is split up into partitions, some of which contain secrets that should be zeroized, and some of which contain data that shouldn’t.

One example is the lifecycle partition, which records whether a chip has been deployed or whether it’s been returned to the manufacturer. Since the chip relies on this partition’s data to determine what should be accessible, zeroizing this partition would render a chip unusable.

As such, we add a zeroizable control field to the memory map for the OTP partitions; this flag determines whether the hardware allows the zeroization mechanism to clear that partition:

During generation of the OTP RTL, we check this field when generating partition offsets, allocating a 64-bit field after the digest indicating whether zeroization has happened. Each zeroizable OTP region then looks like

where the last 64 bits can all be set to 1 (blown fuses) to indicate that the partition has been zeroized.

To address the possibility of “stuck at zero” fuses during zeroization, we use a dedicated integrator-tunable parameter specifying what fraction of correctly blown bits indicates a proper zeroization. If the OTP controller detects that, say, more than 90% of the fuses in the zeroization marker are blown, then it’s reasonable to consider the partition truly zeroized.
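The threshold decision can be modeled in a few lines. This is a hypothetical Python model, not the OTP controller’s RTL: the function name and the default threshold of 0.9 are illustrative, and a blown fuse is read as a 1 bit in the 64-bit marker.

```python
MARKER_BITS = 64


def is_zeroized(marker: int, threshold: float = 0.9) -> bool:
    """Return True if more than `threshold` of the marker's fuses read as blown (1)."""
    mask = (1 << MARKER_BITS) - 1
    blown = bin(marker & mask).count("1")
    return blown > threshold * MARKER_BITS


ALL_BLOWN = (1 << MARKER_BITS) - 1
print(is_zeroized(ALL_BLOWN))  # True
print(is_zeroized(ALL_BLOWN & ~0b111))  # True: 3 stuck-at-zero fuses, 61 of 64 blown
print(is_zeroized((1 << 32) - 1))  # False: only half the marker blown
```

A threshold below 100% tolerates a few fuses that refuse to blow, while still rejecting a marker that was never (or barely) programmed.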

For triggering zeroization itself, the OTP’s existing Direct Access Interface (DAI) is used with the addition of a new command field. By setting the ZEROIZE bit in the DIRECT_ACCESS_CMD register, the OTP controller will zeroize values at a provided address and then return the number of fuses that actually ended up blown. By sequentially clearing each zeroizable OTP field through the DAI, a dedicated zeroization application can clear all secrets from the chip without having to worry about manually tracking zeroization markers.

As a last detail, it may seem inconsequential whether we set the zeroization marker for a partition before clearing the partition or after, as by the end of zeroization the result is the same. It turns out that this ordering is actually important both to security and certifiability: by setting the marker before clearing the partition, we prevent power loss mid-zeroization from leaving partially-zeroized partitions marked as usable by the processor.
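The ordering argument can be checked with a toy model that treats each fuse-programming step as atomic and considers every point at which power could be lost. A state counts as unsafe if some data has already been cleared while the marker is still unset, so the partition would still be treated as usable. The model and its names are hypothetical and ignore details such as partial word writes.

```python
def interrupted_states(num_words: int, marker_first: bool):
    """Yield (marker_set, words_cleared) for every point where power could be lost."""
    steps = ["marker"] + ["word"] * num_words if marker_first else ["word"] * num_words + ["marker"]
    for done in range(len(steps) + 1):  # power lost after `done` steps
        completed = steps[:done]
        yield "marker" in completed, completed.count("word")


def unsafe_count(num_words: int, marker_first: bool) -> int:
    """Interruption points where data is partly cleared but the marker is unset."""
    return sum(
        1
        for marker_set, cleared in interrupted_states(num_words, marker_first)
        if cleared > 0 and not marker_set
    )


print(unsafe_count(8, marker_first=True), unsafe_count(8, marker_first=False))  # 0 8
```

Setting the marker first means every interrupted state is either untouched or already flagged as zeroized, so the processor never consumes a half-cleared partition.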

Checking our Work: Verifying our Zeroization Logic

By the time OTP zeroization happens, a device could be in any number of states. As part of implementing OTP zeroization, we need to be certain that, whatever state the device starts in, it ends up properly zeroized and deterministically usable or unusable according to operator policy.

To do this, we took a pure Universal Verification Methodology (UVM) approach with constrained-random stimulus, targeted sequences, and comprehensive functional coverage. For a review of UVM, there are many excellent guides online, including this one from ChipVerify.

How We Verified It: A Config-Aware UVM DV Strategy

The aim of this effort was two-fold: to ensure that zeroization works regardless of state, and that even under adverse conditions, the OTP is left in a functioning state. As such, two key virtual sequences were introduced:

otp_ctrl_zeroization_vseq.sv, which triggers zeroization under stress (DAI, CSR access)

otp_ctrl_zeroization_with_checks_vseq.sv, which adds post-zeroization integrity and access checks

To ensure coverage across various chip states, these sequences randomly select:

Target OTP partition (vendor, creator, etc.)

Partition offset (start, middle, digest, or zeroization trigger address)

Whether the partition is zeroizable or not

Additionally, the scoreboard and monitor were enhanced to:

Track pre-zeroization state per partition

Verify post-zeroization expected values (zeroized -> all 1s, non-zeroized -> unchanged)

Detect illegal reads from secret partitions (even if data is zeroized)

With these changes, we can be confident that the OTP zeroization mechanism is exercised across every combination of partition, offset, and zeroizability that the sequences can select, rather than only a handful of hand-picked cases.
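The scoreboard's prediction rule from the list above is small enough to state directly. The Python model below only illustrates that rule (the real scoreboard lives in the SystemVerilog UVM environment); the partition names, the 32-bit word width, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

ALL_ONES_32 = 0xFFFF_FFFF  # assumed 32-bit readback granule


def predict(before: Dict[str, List[int]], zeroizable: Dict[str, bool],
            target: str) -> Dict[str, List[int]]:
    """Expected contents after zeroizing `target`: all ones if the partition
    is zeroizable, otherwise unchanged. Other partitions are never touched."""
    expected = {}
    for name, words in before.items():
        if name == target and zeroizable[name]:
            expected[name] = [ALL_ONES_32] * len(words)
        else:
            expected[name] = list(words)
    return expected


def compare(expected: Dict[str, List[int]],
            observed: Dict[str, List[int]]) -> List[Tuple[str, int, int, int]]:
    """List (partition, word index, expected, observed) for every mismatch."""
    mismatches = []
    for name, exp_words in expected.items():
        for i, (e, o) in enumerate(zip(exp_words, observed[name])):
            if e != o:
                mismatches.append((name, i, e, o))
    return mismatches


before = {"SECRET0": [0x1, 0x2], "HW_CFG": [0x3]}
zeroizable = {"SECRET0": True, "HW_CFG": False}
exp = predict(before, zeroizable, "SECRET0")
print(compare(exp, {"SECRET0": [ALL_ONES_32, 0x2], "HW_CFG": [0x3]}))
# [('SECRET0', 1, 4294967295, 2)]
```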

Functional Coverage: Closing the Loop

To confirm that every scenario is actually hit, we added two config-aware covergroups, targeting 100% scenario closure.

The first is zr_dai_cmd_cg, which handles zeroization DAI command coverage. This covergroup samples every DAI access during and after zeroization with the following dimensions:

The second, complementary covergroup is zr_partition_read_cg, which handles post-zeroization read behavior by cross-covering five dimensions for each partition:

Config-Driven DV: One Testbench, Any OTP Layout

In order to make this testing framework maintainable, it needs to adapt to any changes to the OTP layout. As such, we made the testbench configuration-driven: any changes to the OTP memory map are reflected in the virtual sequences, scoreboard, and covergroups. Upon building the testbench, the HJSON OTP memory map is parsed and the resulting partitions are used to fill in Mako templates for the testbench source files:

Using this approach, the testbench immediately adapts to updates to the OTP memory map, ensuring that what’s tested is exactly in sync with what will be taped out.
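The generation step can be illustrated in miniature. In this sketch, the standard library's `json` stands in for HJSON and `string.Template` stands in for Mako (the real tools need third-party packages); apart from the `zeroizable` flag, the memory-map fields, partition names, and the emitted coverpoint are hypothetical. Adding a partition to the map changes the generated bins with no hand edits.

```python
import json
from string import Template

# Stand-in for the HJSON memory map; partition names are hypothetical.
MEMORY_MAP = """
{
  "partitions": [
    {"name": "VENDOR_TEST",    "zeroizable": false},
    {"name": "CREATOR_SECRET", "zeroizable": true},
    {"name": "OWNER_SECRET",   "zeroizable": true}
  ]
}
"""

# Stand-in for a Mako template: a SystemVerilog coverpoint with one bin per
# partition found in the map.
COVERPOINT = Template("cp_partition: coverpoint part_idx {\n$bins\n}")


def partitions(text: str):
    return json.loads(text)["partitions"]


def render_coverpoint(text: str) -> str:
    bins = "\n".join(f"  bins {p['name'].lower()} = {{{i}}};"
                     for i, p in enumerate(partitions(text)))
    return COVERPOINT.substitute(bins=bins)


def zeroizable_names(text: str):
    """Partitions the zeroization sequences should expect to be cleared."""
    return [p["name"] for p in partitions(text) if p["zeroizable"]]


print(render_coverpoint(MEMORY_MAP))
```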

Result: Provable Zeroization

Together, these elements of our testbench allow us to continuously ensure that the OTP zeroization mechanism works exactly as intended. Below is a table of the properties we were able to verify regarding this mechanism:

Because of the configuration-driven design of the testbench, we are able to continuously verify these properties for any integration of the OTP controller.

Conclusion

When working with secure systems, conceptually simple tasks such as OTP zeroization can require a remarkable degree of care and effort to incorporate properly. Practical security considerations, standards compliance, and maintainability all need to play a part in engineering design choices, and rigorous digital verification is essential.

If you’re interested in learning more about ZeroRISC and our open silicon approach to device integrity, drop us a note at info@zerorisc.com.

Bridging Hardware and Software: Summer Interns Making an Impact at ZeroRISC

This blog post is the second in our two-part summer internship series. Following Beshr’s great work on Enabling Position-Independent Code and Dynamic Memory Management in ZeroRISC OS, Yeabsira tackled porting OpenTitan’s interconnect from TL-UL to AXI.

Introduction

Yeabsira is a rising senior at MIT studying Electrical Engineering & Computer Science, with a passion for the hardware stack, from digital systems and chip design to all things low-level. When not engineering, he can be found kickboxing, laying down jazz grooves on his bass guitar, or tinkering with audio circuits.

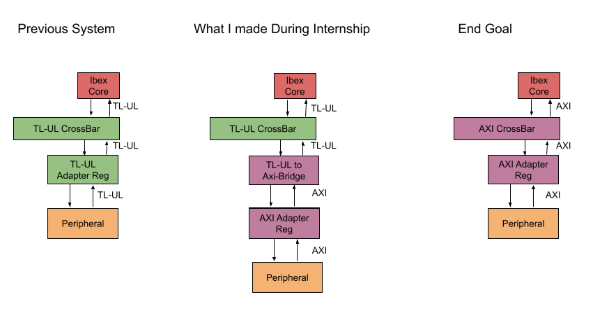

Extending OpenTitan to AXI

My project focused on porting OpenTitan to AXI. OpenTitan’s interconnect is currently TL-UL (TileLink Uncached Light-Weight), which works well. However, many common IP blocks, debug probes, and FPGA tools use AXI. Generating AXI-compatible IP allows straightforward integration with AXI-enabled designs and leverages existing AXI-based DV collateral to enhance verification. My goal was to start an incremental conversion to AXI, beginning with AXI-based register interface transactions while preserving the TL-UL interconnect.

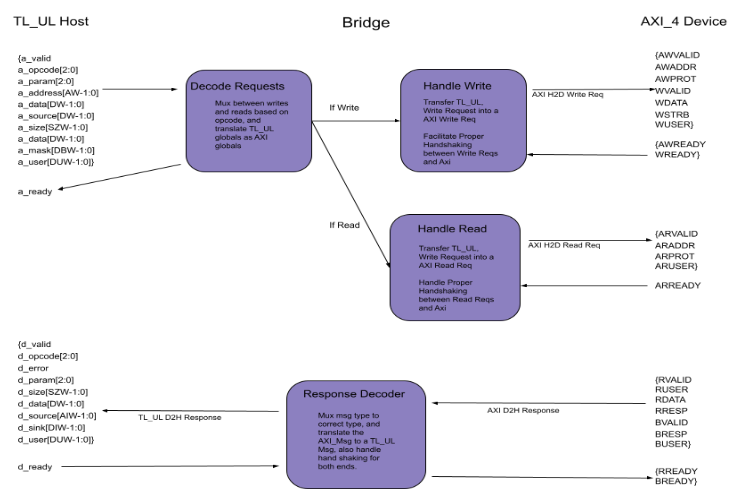

I developed two key RTL components.

The first is a TL-UL to AXI bridge, which repackages TL-UL host-to-device (H2D) requests as AXI requests and converts AXI responses back into TL-UL device-to-host (D2H) responses.

The second is the AXI AdapterReg, which replicates the functionality of the TL-UL AdapterReg. The TL-UL AdapterReg translates TL-UL H2D requests into peripheral CSR read/write operations and returns the results as TL-UL D2H responses, providing a clean communication path. The AXI AdapterReg keeps the same CSR-side handshake while exposing an AXI interface upstream, allowing AXI hosts to communicate directly with peripherals. Replacing the TL-UL AdapterReg with the bridge plus the AXI AdapterReg lets the full DV regression be rerun, which helps demonstrate functional equivalence and supports incremental migration to AXI-native IP.

The simplified diagram below illustrates the implementation and how fully AXI-enabled open silicon blocks could function in the future.

The Bridge

Understanding the bridge requires comparing TL-UL and AXI. TL-UL has two channels: A_Channel for requests and D_Channel for responses. Signals are shared between reads and writes, with A_Opcode indicating the request type. OpenTitan-derived IP adds user bits for response integrity.

AXI uses five separate channels: AW (write addresses), W (write data), AR (read addresses), R (read responses), and B (write responses). Each has its own handshake, so signals are not shared between reads and writes.

I implemented flip-flop registers to capture TL-UL input signals during handshakes. Based on A_Opcode, requests are mapped to the appropriate AXI channels. AXI D2H responses are then routed back to TL-UL D2H outputs according to the registered request type.

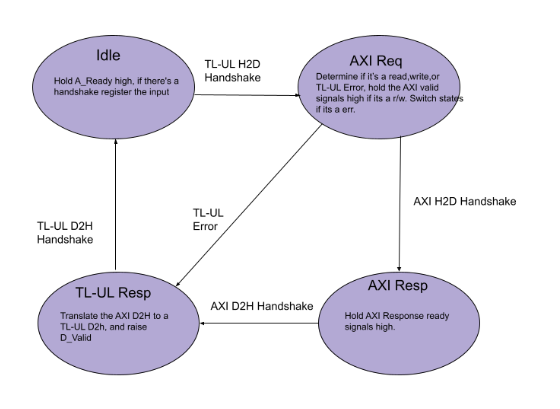
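As a behavioral sketch of that routing (not the RTL), the Python below maps a TL-UL A-channel request onto the AXI channels it drives, then maps the AXI response back using the registered opcode. The opcode encodings follow the TileLink specification (PutFullData = 0, PutPartialData = 1, Get = 4; AccessAck = 0, AccessAckData = 1) and the response codes follow AXI (OKAY = 0b00, SLVERR = 0b10, DECERR = 0b11); the function and dictionary field names are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict


class TlAOpcode(IntEnum):
    PUT_FULL_DATA = 0
    PUT_PARTIAL_DATA = 1
    GET = 4


class TlDOpcode(IntEnum):
    ACCESS_ACK = 0
    ACCESS_ACK_DATA = 1


AXI_OKAY, AXI_SLVERR, AXI_DECERR = 0b00, 0b10, 0b11


@dataclass
class TlARequest:
    opcode: TlAOpcode
    address: int
    data: int = 0
    mask: int = 0xF  # TL-UL a_mask: one bit per byte lane of a 32-bit bus


def to_axi(req: TlARequest) -> Dict[str, Dict[str, int]]:
    """Return the AXI channels (and their payloads) a TL-UL request drives."""
    if req.opcode == TlAOpcode.GET:
        return {"AR": {"araddr": req.address}}
    if req.opcode in (TlAOpcode.PUT_FULL_DATA, TlAOpcode.PUT_PARTIAL_DATA):
        # Writes need both an address (AW) and a data (W) handshake.
        return {"AW": {"awaddr": req.address},
                "W": {"wdata": req.data, "wstrb": req.mask}}
    raise ValueError(f"unsupported TL-UL opcode {req.opcode}")


def to_tl_d(registered: TlAOpcode, resp: int, rdata: int = 0) -> Dict[str, int]:
    """Build the TL-UL D-channel response from an AXI R or B response,
    using the opcode registered when the request was accepted."""
    error = resp in (AXI_SLVERR, AXI_DECERR)
    if registered == TlAOpcode.GET:
        return {"d_opcode": TlDOpcode.ACCESS_ACK_DATA, "d_data": rdata, "d_error": error}
    return {"d_opcode": TlDOpcode.ACCESS_ACK, "d_data": 0, "d_error": error}


print(to_axi(TlARequest(TlAOpcode.PUT_PARTIAL_DATA, 0x40, 0xAB, 0b0001)))
```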

A notable challenge was handling the AW and W handshakes, which can complete independently. The solution was a state machine that tracks AW and W separately and, when only one handshake has occurred, waits for the other. Currently, the bridge supports a single in-flight request at a time, ensuring clear and reliable state transitions. The diagram and accompanying state machine illustrate the timing and toggling of each signal.
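A cycle-level Python model of such a state machine is sketched below. The state names and the split into five states are assumptions for illustration, not the bridge's actual encoding. The model shows the bridge holding `awvalid` or `wvalid` high until each handshake completes, in either order, and accepting no new write until the B response arrives.

```python
from enum import Enum, auto


class Wr(Enum):
    IDLE = auto()     # no write in flight
    SEND = auto()     # AW and W both still waiting for a handshake
    AW_DONE = auto()  # address accepted, data still pending
    W_DONE = auto()   # data accepted, address still pending
    WAIT_B = auto()   # both accepted, waiting for the write response


def outputs(state: Wr) -> dict:
    """Handshake signals the bridge (the AXI manager) drives in each state."""
    return {
        "awvalid": state in (Wr.SEND, Wr.W_DONE),
        "wvalid": state in (Wr.SEND, Wr.AW_DONE),
        "bready": state is Wr.WAIT_B,
    }


def step(state: Wr, start: bool, awready: bool, wready: bool, bvalid: bool) -> Wr:
    """Advance one clock cycle. `start` requests a new write and is only
    honored in IDLE (one request in flight at a time); the other inputs
    come from the AXI subordinate."""
    out = outputs(state)
    aw_fire = out["awvalid"] and awready
    w_fire = out["wvalid"] and wready
    if state is Wr.IDLE:
        return Wr.SEND if start else Wr.IDLE
    if state is Wr.SEND:
        if aw_fire and w_fire:
            return Wr.WAIT_B
        if aw_fire:
            return Wr.AW_DONE
        return Wr.W_DONE if w_fire else Wr.SEND
    if state is Wr.AW_DONE:
        return Wr.WAIT_B if w_fire else Wr.AW_DONE
    if state is Wr.W_DONE:
        return Wr.WAIT_B if aw_fire else Wr.W_DONE
    return Wr.IDLE if (out["bready"] and bvalid) else Wr.WAIT_B


def run(cycles, state=Wr.IDLE):
    """Apply (start, awready, wready, bvalid) tuples and return the state trace."""
    trace = [state]
    for inputs in cycles:
        state = step(state, *inputs)
        trace.append(state)
    return trace


# W is accepted one cycle before AW; the bridge waits for AW, then for B.
trace = run([(True, False, False, False), (False, False, True, False),
             (False, True, False, False), (False, False, False, True)])
print([s.name for s in trace])  # ['IDLE', 'SEND', 'W_DONE', 'WAIT_B', 'IDLE']
```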

AXI AdapterReg

The AXI AdapterReg sits in front of each peripheral, translating AXI transactions into CSR accesses and returning AXI responses. It can also be integrated into the current TL-UL-based system via the bridge.

Its scope is narrower than the bridge’s: it converts AXI’s split channels into a single CSR-side handshake. The CSR interface asserts we_o for writes or re_o for reads, passes the address, data, and byte enables, and expects rdata_i or error_i in return. Because only one in-flight request is supported, each response trivially matches the outstanding request, and the AXI ready signals stay low until that request completes.

Error handling was a critical focus. Differences between TL-UL and AXI can introduce cycle delays or missed errors. The AdapterReg and bridge communicate via an error_i/error_o handshake to ensure detected errors propagate correctly and prevent unhandled system failures.
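The AdapterReg's translation can be modeled the same way. In this hypothetical sketch, `we_o`, `re_o`, `rdata_i`, and `error_i` are the CSR-side signals named above, while `addr_o`, `wdata_o`, `be_o`, and the toy register block are made up for illustration. A CSR error is returned to the AXI side as SLVERR, one reasonable mapping that isn't specified here.

```python
AXI_OKAY, AXI_SLVERR = 0b00, 0b10


def csr_request(kind: str, addr: int, wdata: int = 0, wstrb: int = 0xF) -> dict:
    """Collapse an AXI write (AW+W) or read (AR) into one CSR-side request."""
    if kind == "write":
        return {"we_o": True, "re_o": False, "addr_o": addr, "wdata_o": wdata, "be_o": wstrb}
    if kind == "read":
        return {"we_o": False, "re_o": True, "addr_o": addr, "wdata_o": 0, "be_o": 0xF}
    raise ValueError(f"unknown access kind {kind!r}")


def axi_response(kind: str, rdata_i: int, error_i: bool) -> dict:
    """Turn the CSR result into an AXI B (write) or R (read) response."""
    resp = AXI_SLVERR if error_i else AXI_OKAY
    if kind == "write":
        return {"channel": "B", "bresp": resp}
    return {"channel": "R", "rresp": resp, "rdata": 0 if error_i else rdata_i}


class ToyCsrBlock:
    """Hypothetical peripheral: a few 32-bit registers; unmapped addresses error."""

    def __init__(self, regs: dict):
        self.regs = dict(regs)

    def access(self, req: dict):
        addr = req["addr_o"]
        if addr not in self.regs:
            return 0, True  # (rdata_i, error_i)
        if req["we_o"]:
            mask = 0
            for lane in range(4):  # apply byte enables lane by lane
                if (req["be_o"] >> lane) & 1:
                    mask |= 0xFF << (8 * lane)
            self.regs[addr] = (self.regs[addr] & ~mask) | (req["wdata_o"] & mask)
            return 0, False
        return self.regs[addr], False


def transact(block: ToyCsrBlock, kind: str, addr: int, wdata: int = 0, wstrb: int = 0xF) -> dict:
    # One request in flight: the response always belongs to this request.
    rdata_i, error_i = block.access(csr_request(kind, addr, wdata, wstrb))
    return axi_response(kind, rdata_i, error_i)


regs = ToyCsrBlock({0x0: 0x1122_3344})
print(transact(regs, "write", 0x0, 0xAABB_CCDD, wstrb=0b0011))
print(transact(regs, "read", 0x0), transact(regs, "read", 0x4))
```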

Testing

Approximately 30% of my effort went into RTL design and 70% into testing and debugging. Fast iteration in simulation is crucial, as FPGA iteration is slow. I validated the design first in Verilator, then on FPGA, and finally in the full-chip environment, resolving the major issues at each earlier stage before moving on.

Tests revealed response integrity mismatches, cycle delays in error propagation, address alignment issues, AXI handshake quirks, and the need for the AW/W state machine. Each failed test guided design improvements, producing a robust bridge and AdapterReg ready for full-chip integration.

Impact and Benefits

My bridge and AXI AdapterReg now pass top-level DV regression and are being prepared for a PR to the main branch. Enabling AXI support allows for easier integration into more commercial silicon. Future work includes an AXI Host AdapterReg, enabling common open source RISC-V cores like Ibex to communicate with an AXI interconnect.

Beyond technical skills, I gained experience designing a system from scratch, integrating it into a larger chip, debugging complex issues, and understanding UVM-driven verification workflows. Collaboration with full-time engineers accelerated learning and allowed project completion, highlighting the value of curiosity, persistence, and hands-on problem-solving in digital systems engineering.

My experience demonstrates how early involvement in substantial RTL projects at ZeroRISC provides exposure to real-world digital systems challenges, making it an ideal environment for aspiring engineers seeking meaningful and technically rich work.

Conclusion

Yeabsira’s time at ZeroRISC shows how internships offer meaningful, hands-on exposure to real-world digital systems challenges. From designing and testing complex RTL components to understanding verification flows and system-level integration, interns leave with practical skills and insights that are hard to gain elsewhere. Stay tuned for future updates on our AXI work, and an announcement of its full open source release.

We’re proud of our interns' contributions and grateful for the fresh ideas and energy they brought to ZeroRISC this past summer. Their work not only advances our projects but also demonstrates the kind of learning, growth, and impact our internship program offers.

If you’re interested in learning more about our internship program or exploring collaboration opportunities with ZeroRISC, please visit our Career page.

ZeroRISC’s Summer Interns Expand Runtime Flexibility of our SecureOS

This Summer, ZeroRISC had the pleasure of hosting two Summer interns in our Boston office. This blog post is the first in a two-part series highlighting their work tackling challenging real-world problems at the intersection of hardware and software.

Beshr Bouli, this post's primary author, is a rising junior at MIT majoring in Computer Science and Mathematics. He’s interested in both low-level systems like OSes and compilers and large-scale systems like distributed networks. He’s a dedicated competitive programmer outside the classroom and an avid soccer enthusiast who plays, watches, and organizes the game with equal passion.

His project focused on enabling Position-Independent Code (PIC) and implementing dynamic memory management in ZeroRISC’s Rust-based secure operating system, built on top of TockOS, allowing multi-application execution on MMU-less systems with greater runtime flexibility and improved memory efficiency.

The following is Beshr’s report on his Summer internship with us.

Enabling Position-Independent Code and Dynamic Memory Management

As part of our full-stack philosophy, ZeroRISC supports a Rust-based embedded OS co-developed atop OpenTitan hardware – extending hardware security guarantees into the software. A key feature of this OS is its ability to load and run multiple applications simultaneously. Since OpenTitan does not provide a Memory Management Unit (MMU), the OS cannot assign a virtual address space to each process. While this is not a security concern due to the use of a Memory Protection Unit (MPU), it forces all processes to share the same address space. My internship this Summer focused on increasing flexibility around this memory bottleneck by integrating Position-Independent Code (PIC) and making other core OS enhancements.

Below is a simplified system overview showing all the components my project involved:

Position-Independent Code (PIC)

Compilers typically generate code assuming exclusive control over memory, placing code and data at fixed addresses. On MMU-based systems, the MMU handles virtual-to-physical address translation, but MMU-less systems must handle memory differently. Previously, applications were assigned fixed addresses at compile time, for example, Application A at 0x21000 and Application B at 0x22000. This static allocation leads to memory inefficiency, fragmentation, and difficulty accommodating applications that grow or are unknown at compile time.

I implemented a comprehensive pipeline from compiler to loader, producing binaries that do not assume specific RAM addresses. The focus was on making C applications built with the Clang LLVM-based toolchain position-independent.

Compiler

In my pipeline, the compiler now generates assembly that uses program counter (PC)-relative addressing for code instead of absolute addresses. Note that the PIC memory accesses remain valid regardless of where the binary is loaded, because they are relative rather than absolute. For example:

However, this isn’t the complete solution. Binaries consist of two independently relocatable segments: the code segment, which is read-only and stored in Flash, and the data segment, which is read-write and located in SRAM. PC-relative addressing works within the code segment, but the data segment is loaded dynamically into SRAM, so its addresses are unknown at compile time; and because the two segments are relocated independently, the distance from an instruction to a data object is not fixed either, so PC-relative addressing cannot reach data. This is solved using global pointer (GP)-relative addressing, allowing data to be accessed relative to the GP, which is set at load time. For example:

Other ELF sections are mapped to the two PIC segments based on their read/write permissions, with constants typically placed in the code segment and .bss in the data segment.
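The two addressing modes can be checked with a little arithmetic. The Python sketch below assumes RISC-V's usual `auipc`/`addi` pair for PC-relative references and a gp placed 0x800 bytes past the start of the data segment (a common RISC-V convention; where this pipeline actually places gp isn't stated). All offsets and load addresses are made up. It shows that immediates fixed at link time stay correct wherever the code is placed, and that data stays reachable once the loader picks gp.

```python
def split_pcrel(offset: int):
    """Split a PC-relative byte offset into the immediates of
    `auipc rd, hi20` + `addi rd, rd, lo12`. addi sign-extends lo12,
    hence the +0x800 rounding of the upper part."""
    hi20 = (offset + 0x800) >> 12
    lo12 = offset - (hi20 << 12)
    assert -2048 <= lo12 <= 2047
    return hi20, lo12


def auipc_addi(pc: int, hi20: int, lo12: int) -> int:
    """Address produced by the pair, with `pc` the auipc's own address
    (32-bit wraparound ignored)."""
    return pc + (hi20 << 12) + lo12


# Hypothetical offsets within the code segment, fixed at link time.
AUIPC_OFF, CONST_OFF = 0x120, 0x2F40
HI20, LO12 = split_pcrel(CONST_OFF - AUIPC_OFF)


def pcrel_ok(flash_base: int) -> bool:
    # Same encoded immediates, any load address: the target moves with the code.
    return auipc_addi(flash_base + AUIPC_OFF, HI20, LO12) == flash_base + CONST_OFF


# Assumed gp placement: 0x800 past the data segment start, so a signed
# 12-bit offset from gp spans 4 KiB of data.
GP_BIAS = 0x800
VAR_OFF = 0x40               # hypothetical variable offset in the data segment
VAR_IMM = VAR_OFF - GP_BIAS  # encoded in, e.g., `lw a0, VAR_IMM(gp)`


def gprel_ok(ram_base: int) -> bool:
    gp = ram_base + GP_BIAS  # chosen by the loader at run time
    return gp + VAR_IMM == ram_base + VAR_OFF


print(HI20, LO12)  # 3 -480
print(pcrel_ok(0x2003_0000), pcrel_ok(0x2005_8000), gprel_ok(0x1000_4000))
```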

Linker

The linker finalizes the locations of objects whose placement is unknown at compile time. For example, in C code with externally defined constants, the linker rewrites the compiler’s pseudoinstructions to ensure that the correct relocation sequences are applied depending on whether objects reside in read-only or writable memory.

Loader and Final Relocation

Now that we have assembly code that doesn’t make assumptions about addresses, we need to appropriately set up the environment for the assembly code to run in. Specifically, I modified the C runtime (crt0) to perform runtime initialization and relocation. Code segments remain in Flash, while data segments are loaded into SRAM. The loader sets GP after loading the data segment and finalizes relocation for variables requiring absolute addresses, including constant pointers that are mapped into SRAM to allow updates. Finally, the PC is set to the application entry point, achieving fully position-independent execution.
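To illustrate the relocation step, here is a hypothetical Python sketch of a loader pass. The relocation format (a list of byte offsets of 32-bit pointers inside the data segment), the link-time bases, and the gp offset are all assumptions, and `.bss` zeroing and the jump to the entry point are omitted. Each pointer is rebased according to whether it refers to the code segment, which stays in flash, or the data segment, which now lives in SRAM.

```python
import struct
from typing import Iterable, Tuple

# Hypothetical link-time bases; the real toolchain's choices aren't stated.
CODE_LINK_BASE = 0x0000_0000
DATA_LINK_BASE = 0x8000_0000
GP_BIAS = 0x800  # assumed gp placement relative to the data segment start


def load_data_segment(image: bytes, relocs: Iterable[int], flash_base: int,
                      code_size: int, ram_base: int) -> Tuple[bytearray, int]:
    """Copy the data segment into 'SRAM' and rebase each 32-bit little-endian
    pointer whose byte offset is listed in `relocs`. Returns (sram, gp)."""
    sram = bytearray(image)  # copy out of flash
    for off in relocs:
        (value,) = struct.unpack_from("<I", sram, off)
        if CODE_LINK_BASE <= value < CODE_LINK_BASE + code_size:
            value += flash_base - CODE_LINK_BASE  # points into code (stays in flash)
        elif DATA_LINK_BASE <= value < DATA_LINK_BASE + len(image):
            value += ram_base - DATA_LINK_BASE    # points into data (now in SRAM)
        else:
            raise ValueError(f"pointer at offset {off:#x} is outside both segments")
        struct.pack_into("<I", sram, off, value)
    return sram, ram_base + GP_BIAS


# Two pointers in the data segment: one to a code-segment constant, one to data.
image = struct.pack("<II", 0x0000_0100, 0x8000_0004)
sram, gp = load_data_segment(image, [0, 4], flash_base=0x2001_0000,
                             code_size=0x1000, ram_base=0x1000_4000)
print([hex(w) for w in struct.unpack("<II", sram)], hex(gp))
```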

Memory Management

With PIC implemented, memory can now be managed more flexibly. Previously, applications had fixed memory requirements, which limited dynamic allocation and overall system efficiency. I designed a variable-size-block memory allocator to optimize usage.

Each memory block contains a header with size and allocation status, forming a linked list. For PIC allocations, the allocator uses a best-fit strategy; for non-PIC allocations, it checks that the requested address and size fit within a free block. Blocks can be split to minimize wasted memory, and deallocation coalesces adjacent free blocks.

More complex allocation methods such as segregated lists were considered but rejected, as they would increase OS size and thus decrease memory efficiency. The chosen design balances simplicity, memory conservation, and fragmentation reduction.
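The allocation policy described above can be sketched in Python. This is an illustration under simplifying assumptions, not the OS's allocator: block metadata lives in a Python list instead of in-memory headers (so header overhead is ignored), and addresses and sizes are arbitrary. It shows best fit for PIC allocations, the containment check for fixed-address (non-PIC) allocations, splitting, and coalescing on release.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Block:
    start: int
    size: int
    free: bool


class Allocator:
    """Variable-size blocks kept in address order. Metadata lives in a
    Python list here rather than in per-block headers."""

    def __init__(self, base: int, size: int):
        self.blocks: List[Block] = [Block(base, size, True)]

    def _carve(self, idx: int, start: int, size: int) -> int:
        """Split free block `idx` so [start, start + size) becomes allocated."""
        blk = self.blocks[idx]
        end = blk.start + blk.size
        pieces = []
        if start > blk.start:
            pieces.append(Block(blk.start, start - blk.start, True))
        pieces.append(Block(start, size, False))
        if start + size < end:
            pieces.append(Block(start + size, end - start - size, True))
        self.blocks[idx:idx + 1] = pieces
        return start

    def alloc_pic(self, size: int) -> Optional[int]:
        """PIC apps can go anywhere: best fit picks the smallest free block."""
        fits = [(b.size, i) for i, b in enumerate(self.blocks)
                if b.free and b.size >= size]
        if not fits:
            return None
        _, idx = min(fits)
        return self._carve(idx, self.blocks[idx].start, size)

    def alloc_fixed(self, addr: int, size: int) -> Optional[int]:
        """Non-PIC apps need exactly [addr, addr + size) inside one free block."""
        for i, b in enumerate(self.blocks):
            if b.free and b.start <= addr and addr + size <= b.start + b.size:
                return self._carve(i, addr, size)
        return None

    def release(self, addr: int) -> None:
        """Free the allocation at `addr`, then coalesce adjacent free blocks."""
        for b in self.blocks:
            if b.start == addr and not b.free:
                b.free = True
                break
        else:
            raise ValueError(f"no allocation at {addr:#x}")
        merged: List[Block] = []
        for b in self.blocks:
            if merged and merged[-1].free and b.free:
                merged[-1].size += b.size
            else:
                merged.append(b)
        self.blocks = merged


heap = Allocator(0x1000, 0x1000)
pic_a = heap.alloc_pic(0x100)            # 0x1000
fixed_b = heap.alloc_fixed(0x1800, 0x200)  # 0x1800
pic_c = heap.alloc_pic(0x500)            # best fit: the 0x600 gap at 0x1A00
print(hex(pic_a), hex(fixed_b), hex(pic_c))
```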

Impact and Benefits

The combination of PIC and the new memory allocator enables dynamic loading, updating, and unloading of applications at runtime, without recompilation or manual memory management. Previously, memory had to be statically divided among applications, leaving little flexibility for runtime changes.

Now, the OS loader can manage application placement dynamically, optimize memory usage, and reduce downtime, significantly improving system flexibility and efficiency. These changes provide a new level of abstraction, allowing developers and users to deploy applications without concern for SRAM addresses or memory fragmentation.

I had a great Summer at ZeroRISC and look forward to diving more deeply into OS in the future!

Conclusion

It was our privilege to host Beshr and we’re grateful for all his outstanding work this Summer!

Beshr’s project demonstrates how system-level software engineering and compiler/runtime integration can enhance MMU-less embedded systems, providing both efficiency and flexibility for secure, multi-application execution.

At ZeroRISC, we believe strongly in the power of open source to fuel innovation and amortize maintenance effort over the long term. So, we are currently coordinating with the Tock team on integrating Beshr’s PIC efforts into the relevant upstream Tock repositories.

While Beshr explored enhancements to ZeroRISC’s Rust-based secure operating system, including position-independent code (PIC) and a flexible memory allocator, our second intern, Yeabsira, focused on hardware and interconnects. Look out for that blog coming out shortly!

ZeroRISC and Tock OS Team Present Tutorial at ACM MobiSys 2025

On July 4th, ZeroRISC and the Tock secure embedded OS development team jointly presented a full-day tutorial on secure firmware design for hardware roots of trust (HWRoTs) using Tock.

This tutorial spotlighted Tock's memory protection, process management, and compiler-derived kernel isolation guarantees, providing a comprehensive view of how Tock can uphold HWRoT security requirements from userspace down to the metal.

As the primary author of the HWRoT-specific section of the tutorial, I deeply enjoyed the process of preparing and delivering the relevant materials. To make it useful as an onramp for others to learn more about Tock and general hardware security, I’ve put this post together.

We will review what Tock is and why its design is powerful for trusted hardware development, concluding with the highlights of the tutorial we shared. Please review the tutorial available here as part of the Tock Book for a more advanced overview. Following along at home only requires a development board and an OLED screen breakout; see the Hardware Setup page in the Tock Book for details.

What is Tock?

Tock OS is a Rust-based embedded operating system designed for securely running multiple untrusted applications at once with strong isolation guarantees. By carefully leveraging embedded memory protection hardware and guarantees provided by Rust’s type system, Tock manages to offer both runtime isolation guarantees for applications, and compile-time isolation guarantees between different parts of the kernel, all while allowing applications to be both loaded on demand and completely untrusted.

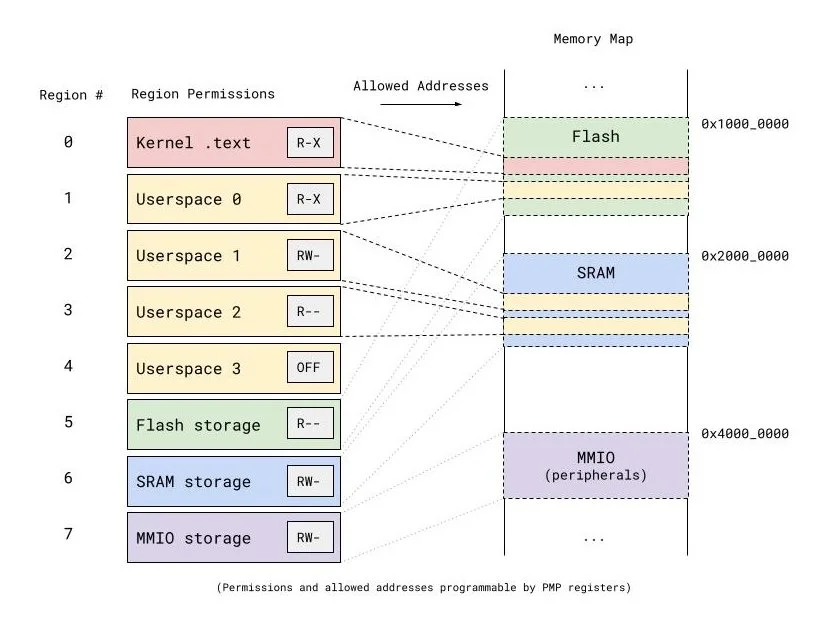

Delving into runtime isolation, nearly all embedded devices have some support for hardware memory protection. Two common examples of such mechanisms are ARM’s Memory Protection Unit (MPU) specifications and RISC-V’s Physical Memory Protection (PMP).

Such mechanisms work by providing a hardware block in the processor with a fixed number of “slots” representing spans of physical memory. Each slot generally has associated permissions, such as:

RW (read-write) for a region of SRAM

RX (read-execute) for a flash region holding application code

RWX (read-write-execute) for a RISC-V Debug Module debug region

These regions are typically configured through dedicated registers (memory-mapped on ARM MPUs, control and status registers for RISC-V PMP). Memory addresses not covered by any region are generally treated as inaccessible. To enforce such a set of permissioned regions, these mechanisms check every processor memory access and fault the processor whenever the access policy given by these regions is violated.

Diagram illustrating a RISC-V PMP configuration. Each PMP region is a contiguous range of memory addresses which can be configured as readable, writable, and/or executable. If a memory access by the processor falls outside of the policy set by these regions, the processor will fault.

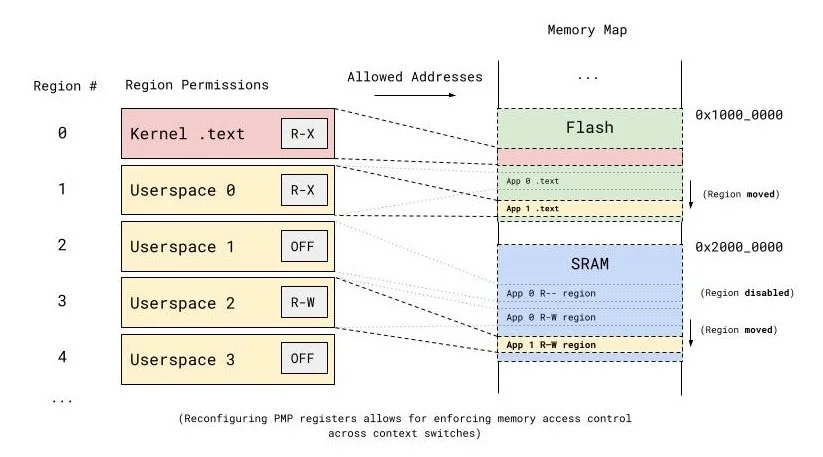
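The per-access check these units perform can be modeled in a few lines. This Python sketch is byte-granular and ignores the actual register encodings (such as RISC-V PMP's TOR and NAPOT address modes), and the region addresses are hypothetical. As with RISC-V PMP for unprivileged code, the first matching region decides and an address outside every region faults; a context switch then amounts to loading a different region list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Region:
    base: int
    size: int
    perms: str  # any of "r", "w", "x"


def allowed(regions: List[Region], addr: int, kind: str) -> bool:
    """Check one access ('r', 'w' or 'x') against an ordered region list.

    The first region containing `addr` decides; if none matches, the
    access faults."""
    for r in regions:
        if r.base <= addr < r.base + r.size:
            return kind in r.perms
    return False


# Hypothetical layout for one application: its code in flash, its RAM in SRAM.
app_a = [Region(0x2003_0000, 0x1_0000, "rx"), Region(0x1000_6000, 0x2000, "rw")]
# On a context switch the kernel would load a different list for app B.
app_b = [Region(0x2004_0000, 0x8000, "rx"), Region(0x1000_8000, 0x1000, "rw")]

print(allowed(app_a, 0x2003_0100, "x"), allowed(app_a, 0x2003_0100, "w"))
```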

Tock’s context switching code carefully makes use of the memory protection mechanisms in several different processor architectures, limiting applications to exactly the memory they should access without assuming anything about how the application binary was constructed.

Diagram illustrating how the RISC-V PMP configuration is reconfigured on context switch. Since a different process's .text section and allowed SRAM regions will be used, PMP regions need to be shifted and even added/removed correspondingly.

In contrast, isolation between components of Tock’s kernel, called capsules, is enforced at compile time. Tock capsules can only be written in safe Rust, a subset of the Rust language that provides strong type-safety and memory-safety guarantees. In particular, safe Rust disallows constructing references to arbitrary memory, preventing capsules from accessing each other’s data except when explicitly permitted.

Moreover, Tock builds on this guarantee with several novel mechanisms that prevent a single capsule from faulting the kernel as a whole. One key example is memory grants: rather than use a kernel heap that one capsule could exhaust, applications instead grant small portions of their own memory, via the syscall interface, to serve as heap space for capsules.

Diagram illustrating Tock's core architecture. Rather than maintain a global heap, Tock allocates an associated "grant" region for each application. This is memory which can be delegated piecemeal by the application to parts of the kernel as heap space, preventing a single kernel component from exhausting the heap for the whole system. (https://tockos.org/assets/img/architecture.png with permission)
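A simplified model of why grants contain the damage follows. It is not Tock's kernel API: the class, the sizes, and the choice to carve grants downward from the top of each process's memory are assumptions for illustration. A capsule that keeps allocating on behalf of one process exhausts only that process's grant space.

```python
class ProcessMemory:
    """Simplified model of one process's RAM allocation: the app's own data
    sits below `app_break`, and kernel grant allocations are carved downward
    from the top of the allocation."""

    def __init__(self, name: str, base: int, size: int, app_bytes: int):
        self.name = name
        self.app_break = base + app_bytes
        self.grant_ptr = base + size  # grants grow down from the top

    def grant_alloc(self, nbytes: int):
        """Reserve capsule state in this process's grant region, or return
        None if it would run into the app's memory."""
        new_ptr = self.grant_ptr - nbytes
        if new_ptr < self.app_break:
            return None
        self.grant_ptr = new_ptr
        return new_ptr


def exhaust(proc: ProcessMemory, chunk: int) -> int:
    """A misbehaving capsule that allocates until it is refused."""
    count = 0
    while proc.grant_alloc(chunk) is not None:
        count += 1
    return count


app_a = ProcessMemory("A", 0x1000, 0x400, app_bytes=0x300)
app_b = ProcessMemory("B", 0x1400, 0x400, app_bytes=0x100)
print(exhaust(app_a, 0x40))          # 4: only A's 0x100-byte grant space is used up
print(hex(app_b.grant_alloc(0x40)))  # 0x17c0: B is unaffected
```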

In this manner, the portion of a Tock-based SoC that needs to be fully trusted remains quite minimal relative to other embedded OSes. For more details, the Tock website’s Design page is an excellent overview.

What makes a Hardware Platform Secure?

A secure hardware platform is often a subsystem of a larger device that provides some level of cryptographically enforced trust to the surrounding system. For instance, a hardware root of trust (HWRoT) is a secure hardware platform responsible for the following:

Securely store cryptographic keys on behalf of the system it belongs to

Allow other subsystems to delegate cryptographic operations

Perform secure boot for the full system

Attest to the present firmware and configuration of the system

Encrypt and decrypt secure storage media

Coordinate device firmware updates for the full system

While a general-purpose processor could feasibly perform all of these operations, the key with a secure hardware platform is that they are performed in a dedicated, extensively tested, and hardened subsystem that can withstand attacks a general-purpose processor cannot.

Depending on the security and standardization requirements of a device, a secure hardware platform might be required to complete the following:

Prevent side-channel attacks by making use of constant-time, masked hardware implementations of cryptographic operations

Resist fault-injection attacks with a hardened processor design and fault detection logic

Detect active tampering using dedicated analog sensors and zeroize data in response

Leverage hardware-backed key stores to prevent direct key exfiltration by firmware

Undergo extensive third-party security review and even formal analysis to provide strong security guarantees

The aim is for other subsystems to be able to confidently rely on the platform’s integrity and confidentiality guarantees, delegating management of sensitive data and operations instead of undergoing the same degree of hardening.

Some notable secure hardware platforms in the wild include:

The general-purpose, open-access OpenTitan Silicon RoT, which comes in both discrete and integrated flavors

Apple's Secure Enclave, the integrated root of trust in iPhone mobile processors

Hewlett Packard Enterprise's Silicon Root of Trust, the integrated root of trust in their out-of-band management chip

Infineon's SLE78 Secure Element, which is used in YubiKey 5 series USB security keys

While these platforms are physical hardware systems, they all rely on firmware in order to make precise, careful use of the secure hardware. Two critical points to note are:

Secure firmware without secure hardware leaves the system open to a variety of hardware attacks, like side-channel analysis and fault injection attacks.

Secure hardware without secure firmware is vulnerable to the same set of firmware-level attacks that a general-purpose processor might be.

As such, a secure hardware platform must be realized as a joint, even co-designed, system carefully assembled with the complementary security guarantees of hardware and software in mind. Because firmware can make or break the security guarantees of a hardware platform, the choice of embedded OS deserves careful consideration.

Why Tock as an OS for Secure Hardware?

As previously illustrated, Tock provides excellent security and isolation guarantees by design while allowing untrusted multi-tenancy, critical for delivering the various complex services a secure hardware platform must provide to its surrounding system.

Moreover, its careful design around the Rust language allows for the elimination of broad classes of memory and type safety violations at compile-time, critical for the delivery of truly secure systems in timeframes appropriate to a fast-moving market.

Yet, Tock serves as an excellent secure hardware platform OS for a more innate reason. Secure hardware isn’t designed as a monolith; typically, a well-designed secure hardware platform will be split into several stages which boot in sequence. In a secure hardware platform like a HWRoT, these form a chain of trust, inductively ensuring the security of the whole system. For example, when performing secure boot:

Boot stage 0 will be immutable (usually as mask ROM)

Boot stage n + 1 will be verified to match a manufacturer-signed hash by boot stage n before handing over control flow

Similarly, for attestation:

Boot stage 0 will be immutable (usually as mask ROM)

Boot stage n will generate a signature of boot stage n + 1’s hash, then lock write access to the certificate for later boot layers

The hierarchical nature of these dual processes allows for reasoning about a small part of the system at a time, allowing for both modularity and a granular permissioning structure. For instance, in a secure microprocessor the boot stages might take the following form, where each stage verifies and attests to the state of the next:

ROM is the immutable first stage, baked into mask ROM

ROM_EXT (ROM extension) lies in flash, and is signed by the chip’s creator for security updates

BL (the bootloader) lies in flash, and is signed by the chip’s owner for everyday firmware updates
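A toy model of this verify-then-hand-over loop is sketched below. It is not OpenTitan's boot code: HMAC-SHA256 stands in for the asymmetric signatures a real chain would use (only so the sketch runs on the standard library), and the keys and stage contents are hypothetical. Tampering with any later stage stops the chain at the stage that verifies it, and the recorded hashes are what an attestation flow would sign.

```python
import hashlib
import hmac
from typing import List, Tuple

# Stand-ins for the creator's and owner's signing keys. A real chain uses
# asymmetric signatures, so the device never holds a secret signing key.
CREATOR_KEY = b"hypothetical creator key"
OWNER_KEY = b"hypothetical owner key"

Stage = Tuple[str, bytes, bytes, bytes]  # (name, code, signature, verifying key)


def sign(key: bytes, code: bytes) -> bytes:
    return hmac.new(key, hashlib.sha256(code).digest(), hashlib.sha256).digest()


def signed_stage(name: str, code: bytes, key: bytes) -> Stage:
    return (name, code, sign(key, code), key)


def boot(stages: List[Stage]) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Stage 0 (ROM) is immutable and trusted by construction. Each later
    stage is verified by the one before it before control is handed over.
    Returns the booted stage names and the recorded measurements."""
    booted = ["ROM"]
    measurements = []
    for name, code, sig, key in stages:
        if not hmac.compare_digest(sign(key, code), sig):
            raise RuntimeError(f"{booted[-1]} refused to hand over to {name}")
        measurements.append((name, hashlib.sha256(code).hexdigest()))
        booted.append(name)
    return booted, measurements


chain = [signed_stage("ROM_EXT", b"rom_ext v1", CREATOR_KEY),
         signed_stage("BL", b"bootloader v7", OWNER_KEY)]
print(boot(chain)[0])  # ['ROM', 'ROM_EXT', 'BL']
```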

Tock’s design and trust model allows for seamlessly extending this chain of trust, instantiating the bootloader with Tock’s boot code and using applications as later boot stages. Because Tock has built-in support for application code signing and can be securely extended to provide application attestation, we can extend our chain of trust beyond the bootloader, with each application verified and attested as a further boot stage.

As Tock provides strong compile-time isolation between kernel components, the application verification and attestation components of Tock can be carefully verified and isolated. Similarly, Tock’s design allowing for untrusted, hot-loadable, code-signed applications means that application code signing permissions can be trivially delegated to and revoked from a third party by the device owner on demand.

Moreover, the kernel components that each application relies on (for instance, a secure signing service might rely on a hardware ECDSA driver) can be easily isolated and verified individually, providing defense in depth within the kernel even if a single driver is compromised.

In summary, this makes Tock a viable option for providing a reliable, secure, extensible OS for secure system design.

New Tock Book Section: Tock as a Root of Trust OS

As part of delivering our demo at MobiSys, zeroRISC and Tock OS developers contributed a chapter to the Tock OS Book, Tock as a Hardware Root of Trust Operating System.

This chapter, available here, focuses on building a toy encryption service as a Tock application to mimic the behavior of a small HWRoT like a secure element. From there, the reader is guided through a set of increasingly invasive attacks on the system, demonstrating the defense in depth Tock provides in a practical application.

I recommend reviewing this tutorial, and encourage readers to share feedback with us. If you’re interested in learning more, please take a look at our early-access program or contact us at info@zerorisc.com.

Conclusion

Secure hardware platforms are a modern example of systems whose success depends on considering hardware and software carefully and simultaneously.

Tock, a modern Rust-based embedded OS, is an excellent example of how an operating system can complement the security guarantees of the hardware it runs on in a seamless fashion. At zeroRISC, we care about delivering strong security guarantees that extend up to the application software our customers rely on, and leveraging modern, secure embedded OS design is a critical part of being able to deliver that in practice.

Future of PQC on OpenTitan

This is part 3 of 3 in an experience report about implementing SPHINCS+ (aka SLH-DSA) for secure boot in OpenTitan root of trust (RoT) chips. SPHINCS+ is a post-quantum secure signature algorithm and one of the four winners of NIST’s post-quantum cryptography competition; the final standard was recently released as FIPS 205.

Read part 1 here and part 2 here.

This post will focus on the future of post-quantum cryptography on OpenTitan, specifically:

new SPHINCS+ parameter sets that dramatically improve secure boot tradeoffs, and

potential hardware modifications to support lattice-based cryptography on OpenTitan.

I said the last post would be the longest, but it looks like this one is. Turns out there’s a lot to say about the future!

New SPHINCS+ Parameter Sets

For the OpenTitan Earl Grey chip design, we set up the SPHINCS+ verification so that it's a configuration option during manufacturing; you can decide to run secure boot using only classical (non-PQC) verification or using both classical and SPHINCS+. We continued to support the classical-only option because SPHINCS+, although fast enough to be tolerable, was still a few times slower than RSA or ECDSA. Specifically, SPHINCS+ with the shake-128s parameter set takes about 9.3ms on Earl Grey when clocked at 100MHz, compared to about 2.4ms for RSA-3072 and 4.2ms for ECDSA-P256, which provide about the same level of security.
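Since later comparisons in this post are given in cycles, here is a small Python sketch that converts the timings above to cycle counts at the stated 100MHz clock and shows how much longer SPHINCS+ takes than each classical scheme. The inputs are only the numbers quoted in the paragraph above; nothing here is a new measurement.

```python
# Convert the quoted Earl Grey verification times (ms at 100 MHz) to cycles
# and compare SPHINCS+ against the classical schemes.
CLOCK_MHZ = 100  # Earl Grey clock frequency quoted in the text

timings_ms = {
    "SPHINCS+ shake-128s": 9.3,
    "RSA-3072": 2.4,
    "ECDSA-P256": 4.2,
}


def ms_to_cycles(ms: float, clock_mhz: int = CLOCK_MHZ) -> int:
    """cycles = seconds * Hz = (ms / 1000) * (MHz * 1e6) = ms * MHz * 1000."""
    return round(ms * clock_mhz * 1000)


cycles = {name: ms_to_cycles(ms) for name, ms in timings_ms.items()}
spx = timings_ms["SPHINCS+ shake-128s"]

for name, ms in timings_ms.items():
    ratio = spx / ms  # how many times longer SPHINCS+ takes than this scheme
    print(f"{name}: {cycles[name]:,} cycles; SPHINCS+ takes {ratio:.1f}x as long")
```

At 100MHz, 1ms is 100K cycles, so SPHINCS+ lands at about 930K cycles: roughly 3.9x the RSA-3072 time and 2.2x the ECDSA-P256 time, which is what "a few times slower" means here.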

This performance picture is about to change. The paper A note on SPHINCS+ parameter sets (2022), authored by Stefan Kölbl (one of the SPHINCS+ authors) and myself, explores new parameter sets that are better suited to firmware signing. As I described in the first post of this series, SPHINCS+ is a signature framework; it has several settings that you can tweak to get different characteristics. The NIST PQC competition required that all submissions support up to 2^64 signatures per key. This is so many signatures that, practically speaking, one can simply never worry about counting. For many applications, this is a pragmatic choice to reduce complexity and risk, especially when the same key may be used by multiple entities. For firmware signing specifically, the context is different; the signing environment is tightly controlled, and signing generally won’t happen more frequently than once per month in practice. In this context, 2^20 signatures are more than enough; that’s enough to sign once per month for 87,000 years, or once per day for 2,872 years. Even 2^10 signatures is enough to sign once per month for 85 years.
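The signing-lifetime figures above are simple division. This sketch reproduces them, counting whole years and assuming a 365-day year (leap days ignored) for daily signing, with 2^64 included for contrast.

```python
def full_years(max_signatures: int, signatures_per_year: int) -> int:
    """Whole years of signing at a fixed cadence before the signature budget
    runs out. Daily signing assumes a 365-day year (leap days ignored)."""
    return max_signatures // signatures_per_year


MONTHLY, DAILY = 12, 365

for label, budget in [("2^64", 2**64), ("2^20", 2**20), ("2^10", 2**10)]:
    print(f"{label}: {full_years(budget, MONTHLY):,} years monthly, "
          f"{full_years(budget, DAILY):,} years daily")
```

A 2^20 budget covers 87,381 years of monthly signing or 2,872 years of daily signing, and even 2^10 covers 85 years of monthly releases.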

And if you exceed the maximum number of signatures for the target security level, the characteristics of SPHINCS+ are such that the security level drops off very gradually; you would retain practical security, over 100 bits, even after signing over 1000x more than you should. This is a strong contrast with the LMS/XMSS signature schemes, where practical security is immediately lost if the state is mishandled even once.

So the question was: if the maximum number of signatures was relaxed, what new possibilities would that open up in the tradeoff space for SPHINCS+ parameters? Stefan built a way to automatically search through the parameter space (on GitHub: kste/spx-few/), and was able to map the landscape with detailed graphs like this one:

This was a promising result; targeting the same security level but a lower maximum number of signatures, it was possible to significantly reduce signature size without performance sacrifices. Optimizing for verification performance (since these parameter sets by definition target contexts where signing is infrequent) and signature size, the paper proposes effectively six new parameter sets, one each for the “s” variants in FIPS 205. The new parameters are called “Q20” in reference to the 2^20 signature bound, so the analogue of shake-128s from FIPS 205 is shake-128s-q20. The other signature framework parameters don’t change when the hash function changes, so sha2-128s-q20 is exactly the same as shake-128s-q20 except for the hash function.

OpenTitan was the case study for firmware signing in the paper, due to its combination of production quality implementation and open-source availability. I ran benchmarks for several of the new parameter sets using our secure boot implementation. For shake-128s-q20, which is the security level we’d most likely target, we saw a whopping 58% decrease in signature size and a 79% reduction in verification time.

The branch with the benchmarking scripts and reproduction instructions is available at jadephilipoom/opentitan:spx-benchmark.

That speedup is enough to make SPHINCS+ as fast as, or faster than, classical non-post-quantum cryptography. On OpenTitan, that’s nearly as fast as RSA, and significantly faster than ECDSA at the same security level (note that the ECDSA number is slightly outdated; we’ve made some speed improvements since the paper benchmarks were measured, and it’s now more like 420K cycles).

Although the signature size is still larger, it’s now only 4x larger than RSA’s combined public key and signature size (as discussed in the first post, it’s the sum of the two that really matters). The existing FIPS 205 parameter set is nearly 10x larger. This is a huge improvement to the tradeoff space of working with SPHINCS+ for firmware signing.
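To see roughly where these ratios come from, here is the arithmetic in Python. It assumes the FIPS 205 sizes for the 128s parameter sets (7,856-byte signature, 32-byte public key) and counts the RSA-3072 public key as just its 384-byte modulus, on the assumption of a fixed public exponent such as 65537. The Q20 signature size is an estimate obtained by applying the 58% reduction quoted above, not the paper's exact figure.

```python
# Sizes in bytes. SPHINCS+ 128s values are from FIPS 205; the RSA public key
# is counted as the 384-byte modulus only (assumes a fixed exponent e=65537).
SPX_128S_SIG = 7856
SPX_128S_PK = 32   # 2n bytes with n = 16; Q20 keeps the same security level
RSA3072_SIG = 384
RSA3072_PK = 384

rsa_total = RSA3072_SIG + RSA3072_PK
spx_total = SPX_128S_SIG + SPX_128S_PK

# Estimated Q20 signature: 58% smaller than the FIPS 205 128s signature.
q20_sig_estimate = SPX_128S_SIG * (1 - 0.58)
q20_total = q20_sig_estimate + SPX_128S_PK

ratio_128s = spx_total / rsa_total
ratio_q20 = q20_total / rsa_total
print(f"shake-128s:     {ratio_128s:.1f}x RSA-3072 (public key + signature)")
print(f"shake-128s-q20: {ratio_q20:.1f}x RSA-3072 (public key + signature)")
```

Under these assumptions the FIPS 205 set comes out at roughly 10x RSA's combined size, and the Q20 set at a little over 4x.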

Now that we have an implementation for SHA-2 parameters rather than SHAKE, I can add some new benchmarks, shown here for the first time:

As discussed in the previous post, the SHA-2 parameters are faster on OpenTitan because the SHA-2 accelerator hardware implementation has less power side-channel hardening than the SHAKE accelerator. For secure boot, where we only do verification and therefore never handle secret data, we don’t need the hardening, so the speed is a free advantage. With SHA-2 and the Q20 parameters, SPHINCS+ is in fact significantly faster than RSA, and more than twice as fast as ECDSA, making it a very practical choice for the boot process despite the large signatures.

We’re very enthusiastic about these new parameter sets, which were presented at the 5th NIST PQC Standardization Conference in April 2024. At the same conference, NIST announced that they indeed plan to standardize some parameter sets for smaller numbers of signatures, in a separate publication from FIPS 205. We strongly support the standardization of reduced-maximum-signature parameter sets. Standardizing them will help hardware projects like ours roll out PQC quickly and effectively, a necessary precondition for the PQC migration of any system that relies on secure boot.

Hardware Acceleration for Lattice Cryptography

Hardware security means more than just secure boot. In some cases, we might want to be able to run alternative post-quantum signature algorithms on OpenTitan, especially for cases where we need to compute a signature (rather than only verify one). Signing speed, for example, is not a strength of SPHINCS+. Also, some of the data we handle in signing and key generation is secret, so side-channel attacks (e.g. power and timing) are in scope. Defending against these side channels is probably well within reason if we use the SHAKE parameter sets, since OpenTitan’s SHAKE accelerator has a masked hardware implementation.

Another concern for signing is fault injection, and this one is trickier for SPHINCS+. I touched on fault injection in the last post; it means that the attacker uses a laser or other means to deliberately insert a glitch during computation. The 2018 paper Grafting Trees: a Fault Attack against the SPHINCS framework described an attack that essentially causes SPHINCS+ (and several other related schemes) to reuse an internal one-time signature. The resulting faulty signatures pass verification, but reveal information to the attacker that, with enough signatures, allows them to create forgeries. The attack was experimentally verified shortly after being published, and a recent analysis confirms that the only real defense is redundancy. In other words, we would have to perform each signature twice or more to protect against this scenario. Given that signing is already pretty slow, this isn’t ideal for something we might have to do relatively frequently. It’s still viable to do SPHINCS+ signing and key generation on OpenTitan, and I believe we should support it. However, it would be good to support alternative post-quantum signatures as well.
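The redundancy countermeasure can be sketched generically: compute the signature more than once and release it only if every run agrees. This minimal Python sketch assumes a deterministic signer (SPHINCS+ is deterministic when run without its optional extra randomness), so any mismatch indicates a fault. The functions `sign_with_redundancy`, `toy_sign`, and `make_glitchy_signer` are hypothetical; `toy_sign` is an HMAC-SHA256 stand-in, not SPHINCS+.

```python
import hashlib
import hmac
from typing import Callable, Optional

# A signing function: (secret_key, message) -> signature.
SignFn = Callable[[bytes, bytes], bytes]


def sign_with_redundancy(sign: SignFn, sk: bytes, msg: bytes,
                         copies: int = 2) -> Optional[bytes]:
    """Run a deterministic signer `copies` times. Release the signature only
    if every run matches; otherwise return None and withhold all output."""
    if copies < 2:
        raise ValueError("redundancy needs at least two computations")
    first = sign(sk, msg)
    for _ in range(copies - 1):
        # Any difference means at least one run was disturbed.
        if not hmac.compare_digest(first, sign(sk, msg)):
            return None
    return first


def toy_sign(sk: bytes, msg: bytes) -> bytes:
    """Hypothetical deterministic stand-in signer (HMAC-SHA256, NOT SPHINCS+)."""
    return hmac.new(sk, msg, hashlib.sha256).digest()


def make_glitchy_signer(fault_on_call: int) -> SignFn:
    """Wrap toy_sign so that the Nth call returns a corrupted result."""
    calls = 0

    def glitchy(sk: bytes, msg: bytes) -> bytes:
        nonlocal calls
        calls += 1
        sig = bytearray(toy_sign(sk, msg))
        if calls == fault_on_call:
            sig[0] ^= 0x01  # simulated fault: flip a single bit
        return bytes(sig)

    return glitchy


key, message = b"demo key", b"firmware image"
print(sign_with_redundancy(toy_sign, key, message) is not None)             # clean run
print(sign_with_redundancy(make_glitchy_signer(2), key, message) is None)   # faulted run
```

The cost is plain: each extra copy multiplies signing time. A duplicate-and-compare check also cannot catch a fault that corrupts every run identically.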

Dilithium or Falcon?

So, what are the other options? Besides SPHINCS+, the other two signature algorithms that won the NIST competition are Dilithium (aka ML-DSA) and Falcon (aka FN-DSA). Here are some relevant benchmarks for ARM Cortex-M4, courtesy once again of the excellent pqm4 project. I’ve highlighted some particular measurements that are likely to be either challenging (yellow) or near-impossible (red) to accommodate during boot for an embedded system like OpenTitan with limited memory:

* Can also be stored as a 32-byte seed.

** Falcon signing is many times slower than this without floating-point instructions (this excellent and informative blog post from Bas Westerbaan at Cloudflare estimates about 20x slower).

As discussed in the first post of this series, I am using parameter sets here that aim for a higher security level than for SPHINCS+. This is a hedge against future cryptanalytic attacks potentially weakening lattice signature schemes, since they are newer and less well-understood than hash-based cryptography.

A few observations we can make based on the pqm4 measurements:

Dilithium signatures are almost 3x larger than Falcon ones.

Falcon key generation and signing are many times slower than Dilithium, especially taking into account that the current OpenTitan designs do not have floating point instructions.

The stack size required by the “clean” implementations is probably not feasible for an embedded system; we will need to use stack-optimized versions, and pay the price in code size and Dilithium signing speed.

Falcon code size is much larger than Dilithium.

We can likely accelerate Dilithium more than Falcon, because a much higher percentage of its computation is SHAKE hash computations that can use our hardware SHAKE accelerator.
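The reasoning behind that last point is Amdahl's law: accelerating only part of a computation limits the overall gain to 1 / (1 - f), where f is the accelerated fraction of the runtime. The sketch below uses made-up fractions (60% and 20% of runtime spent in SHAKE) and a hypothetical 10x hashing speedup purely to show the shape of the argument. These are not measurements of Dilithium or Falcon.

```python
def amdahl_speedup(accelerated_fraction: float, accel_factor: float) -> float:
    """Overall speedup when `accelerated_fraction` of the runtime becomes
    `accel_factor` times faster and the rest is unchanged (Amdahl's law)."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if accel_factor <= 0:
        raise ValueError("acceleration factor must be positive")
    new_time = (1.0 - accelerated_fraction) + accelerated_fraction / accel_factor
    return 1.0 / new_time


# Illustrative, hypothetical hashing shares; not measured values.
for label, frac in [("hash-heavy scheme", 0.6), ("hash-light scheme", 0.2)]:
    speedup = amdahl_speedup(frac, 10.0)
    ceiling = 1.0 / (1.0 - frac)  # limit as the hash accelerator gets infinitely fast
    print(f"{label}: {speedup:.2f}x with 10x hashing, at most {ceiling:.2f}x")
```

With these toy numbers, the hash-heavy scheme gains about 2.2x while the hash-light one gains barely 1.2x, however fast the accelerator is.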

Taking all of this information together, it’s clear that Dilithium is a more appealing option than Falcon for OpenTitan, at least with the current design. The code size alone for stack-optimized Falcon is probably disqualifying; that’s more SRAM than the current Earl Grey OpenTitan chip has. Besides that, the key generation and signing times are quite slow, even if we took the big step of adding floating-point instructions, and accelerating hashing won’t get us very far in speeding it up.

Another advantage of Dilithium is that the secret keys are derived from short seeds. This opens up the possibility to generate the secret keys from OpenTitan’s hardware key manager block. The key manager block maintains a secret internal state and performs key-derivation operations to generate key material that it loads directly into hardware blocks, for example the OTBN coprocessor that we currently use to accelerate ECDSA and RSA operations.
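To make the "short seed" point concrete, here is a Python sketch of the first step of ML-DSA key generation as we read it in FIPS 204. A 32-byte seed ξ is expanded with SHAKE256, domain-separated by the matrix dimensions k and ℓ, into ρ (which expands into the public matrix), ρ′ (which samples the secret vectors), and K (used during signing). Everything else in the key pair is derived deterministically from these values. The function name is hypothetical, and the idea of feeding it a key-manager-derived seed is an illustrative assumption. This is not OpenTitan code, and it stops after seed expansion.

```python
import hashlib
from typing import Tuple

# (k, l) matrix dimensions for each ML-DSA parameter set (FIPS 204).
ML_DSA_DIMS = {"ML-DSA-44": (4, 4), "ML-DSA-65": (6, 5), "ML-DSA-87": (8, 7)}


def expand_seed(xi: bytes, param_set: str = "ML-DSA-87") -> Tuple[bytes, bytes, bytes]:
    """Expand a 32-byte seed into (rho, rho_prime, K) with SHAKE256.

    Hypothetical helper: in a design like the one described above, `xi`
    could come from a hardware key manager instead of being stored.
    """
    if len(xi) != 32:
        raise ValueError("ML-DSA seeds are 32 bytes")
    k, l = ML_DSA_DIMS[param_set]
    out = hashlib.shake_256(xi + bytes([k, l])).digest(128)
    rho, rho_prime, key_k = out[:32], out[32:96], out[96:]
    return rho, rho_prime, key_k


seed = bytes(32)  # all-zero seed, for demonstration only
rho, rho_prime, key_k = expand_seed(seed)
print(len(rho), len(rho_prime), len(key_k))
```

Because the expansion is deterministic, storing or re-deriving the 32-byte seed is enough to regenerate the full secret key when needed.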

What about Kyber?

The fourth algorithm selected in the NIST PQC competition is Kyber, aka ML-KEM. Kyber is not a signature algorithm; instead, it is for “key encapsulation” and allows two parties to exchange information such that they end up with a shared symmetric key. This is an extremely useful operation; for example, TLS uses an exchange like this to set up an encrypted connection between a website’s server and a user’s browser. Once you do the key exchange operation, you can use fast “symmetric” cryptography like AES to encrypt data. Symmetric cryptography is post-quantum secure already, so there’s no need to change much here or accept performance penalties.

Although we don’t need Kyber at the moment for any of OpenTitan’s core device functions, it’s an algorithm that we expect high demand for, and it would be good to have a hardware architecture that supports it. Luckily, many of the underlying structures of Kyber are very similar to Dilithium, so we can consider them together.

The Design Space

So the next question is, how easily can OpenTitan (or, more precisely, a future hardware instantiation of OpenTitan) support efficient Dilithium and Kyber operations, and what challenges will we face there?

For the answers we can look to a pair of recent papers: Enabling Lattice-Based Post-Quantum Cryptography on the OpenTitan Platform (2023) and Towards ML-KEM & ML-DSA on OpenTitan (2024, pre-print – for full disclosure, I’m one of the authors). The first paper evaluates a more hardware-focused approach to supporting lattice cryptography, extending the OTBN coprocessor with a new post-quantum ALU and specialized instructions (3). It focuses on verification operations. The second paper evaluates four different hardware and software implementations of Dilithium and Kyber with OpenTitan’s OTBN coprocessor:

1. Unmodified OTBN ISA and hardware design, but without OTBN’s current memory constraints

2. Same as (1), but with a direct connection between OTBN and KMAC hardware blocks

3. Same as (2), but with the OTBN ISA extended with five new vector instructions

4. Same as (3), but with the hardware implementation of the new instructions optimized for speed instead of area.

Side channel defenses are also not in scope for either of these papers, and will probably incur a significant cost in terms of code size, stack usage, and runtime. Luckily, the KMAC hardware block already includes these defenses, so the cost would only apply to the non-hashing computations. Although it’s difficult to estimate the exact cost of side channel countermeasures, it’s important to keep this in mind and leave some slack in the estimates to accommodate it.

Memory optimizations would be necessary for our embedded context, and are only partially in scope for the above papers. However, from Dilithium for Memory Constrained Devices (2022) and the pqm4 measurements, we can get a decent idea of how much stack optimizations would slow things down, and how far they could bring stack usage down. That paper also helpfully optimizes for code size, supporting all 3 Dilithium variants in around 10kB of code.

Together, this research helps us get a sense of the tradeoff space available, primarily between hardware area, stack usage, and speed. Much thanks to all of the researchers involved; it’s amazing to be able to reference all of this information instead of guessing! This is also a great example of how an open source hardware project can benefit from external researchers having the ability to experiment.